Due: Thursday Feb 28, 11:59PM EST

100 points total

Overview

In this assignment you will write shared-address space (using OpenMP) and message passing (using MPI) programs that solve the Wandering Salesperson problem. You will first implement your programs on the multi-core machines in the GHC clusters. Once you've achieved good scaling on these small core count machines, you'll be allowed to run your code on the Blacklight supercomputer at the Pittsburgh Supercomputing Center.

Wandering Salesman Problem

The wandering salesman problem is a variant of a well known NP-hard problem: given the distances between each of $n$ cities, find the shortest path that starts at one city and visits all other cites exactly once. (The path does not need to return to the start city.)

The input to the problem is given in the form of a matrix. An element of the matrix, d[i][j] gives the distance between city i and city j. Specfically, the input to your program is a file organized as follows:

N

d[1][2]

d[1][3] d[2][3]

d[1][4] d[2][4] d[3][4]

.

.

.

d[1][N] d[2][N] d[3][N] ... d[N-1][N]

where N is the number of cities, and d[i][j] is an integer giving the distance between cities i and j. The program mkinput.py included in the starter code can be used to generate a random input in the proper format. The output from the program should be an ordered list of cities (numbers between 1 and N) describing the shortest path. Note that the three-city (n=3) orderings (1 -> 2 -> 3 and 3 -> 2 -> 1) correspond to the same three-city path, it is just traversed in a different direction. Your programs can emit either ordering as a solution.

Branch-and-Bound Solutions to Combinatorial Optimization Problems

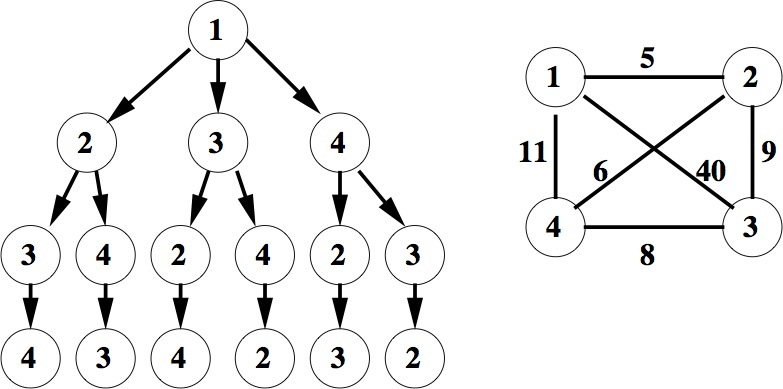

First, consider a simple brute-force solution to the the WSP that evaluates the cost of all paths. One way to think about evaluating every possible route from city 1 is to construct a tree as shown in the figure below (n=4 in the example).

For this WSP:

d[1][2] = 5

d[1][3] = 40

d[1][4] = 11

d[2][3] = 9

d[2][4] = 6

d[3][4] = 8

Note how in Figure 1, each path from the root to a leaf of the tree constitutes a valid wandering salesperson path that visits all cities. There are six unique root-to-leaf paths in this tree. For example, the route 1 -> 3 -> 4 -> 2 is a valid path and has a distance of $40+8+6 = 54$.

A simple brute-force solution to the WSP is to construct trees rooted by each of the n possible starting cities, and then, for each tree, compute the cost of all leaf paths via traversal from the root to each leaf. The path with the smallest distance is the answer to the WSP problem. (Note that this brute-force solution tries each unique path twice -- in "forward" and "backward" order.) A more work-efficient way to traverse each tree is to do it recursively in depth-first order, incrementally keeping track of the current path length from the root. This depth-first traversal order avoids the need to recompute path lengths for common prefixes.

The branch-and-bound approach follows the depth-first traversal order, but with some added intelligence. It uses more knowledge of the problem to prune subtrees so that less overall evaluation is necessary. The basic approach works as follows:

- Evaluate one route of the tree in its entirety, (say

1 -> 2 -> 3 -> 4) and determine the distance of that path. Call this distance the currentboundof the problem. The bound for this path in the above tree is $5 + 9 + 8 = 22$. You can think of 'bound' as the length of the best path found so far. - Next, suppose that a second path is partially evaluated, say path

1 -> 3, and the partial distance, $40$, is already greater than thebound. This implies there is no need to complete the traversal of the subtree rooted by the current path because all of those possible routes (in this case there are two) must have a total distance greater than the bound. In this way the subtree is pruned and therefore does not require evalution. - Whenever any route is discovered that has a shorter total distance than the current bound, then the bound is updated to this new value. As the program continues, the bound tightens, the more aggresive pruning is possible.

In summary, a branch-and-bound approach always remembers the best path it has found so far, and uses that to prevent useless search down parts of the tree that can not possibly produce shorter routes. For larger trees, this could result in the removal of many possible evaluations.

Program 1: Shared memory solution with OpenMP (40 pts)

OpenMP is an API and set of C-language extensions that provides compiler support for parallelism. It is well documented online, but here is a brief example:

/* Serial code here ... */

#pragma omp parallel

{

/* This block is potentially executed in parallel on multiple cores */

}

/* Wait until all threads finish before continuing */

/* Serial code again ... */

Note: We will be using omplace on blacklight and the environment variable OMP_NUM_THREADS on the ghc machines to control the number of threads used in the parallel regions. You can run programs yourself with different numbers of thread via setting the environment variable OMP_NUM_THREADS to the desired number of threads and running your program. You can do this in one line in bash with the following on ghc:

OMP_NUM_THREADS=4 ./my_openmp_program

which is equivalent to the following on blacklight (note that omplace also pins different threads to different cores):

omplace -nt 4 ./my_openmp_program

You can also use OpenMP to tell the compiler to parallelize iterations of for loops, and to manage mutual exclusion.

/* The iterations of the for loop may be parallelized */

#pragma omp parallel for

for (int i = 0; i < 100; i++) {

#pragma omp critical

{

/* This block will be executed by at most one thread at a time. */

printf("Thread %d got iteration %lu\n", omp_get_thread_num(), i);

}

}

As a participant in a 400-level course, we expect you to be able to read OpenMP documentation on your own, but you here are some useful links to get started:

- The OpenMP 3.0 specification: http://www.openmp.org/mp-documents/spec30.pdf.

- An OpenMP cheat sheet http://openmp.org/mp-documents/OpenMP3.1-CCard.pdf.

Note: make sure that you are reading the API that corresponds to the version of gcc you are using. According to the gcc openmp page: As of GCC 4.2, the compiler implements version 2.5 of the OpenMP standard and as of 4.4 it implements version 3.0 of the OpenMP standard. The OpenMP 3.1 is supported since GCC 4.7.

Program 2: Message passing with Open MPI (40 pts)

Open MPI is a message passing API used frequently in scientific computing. Here is a brief example:

int main(int argc, char** argv) {

int size; /* the number of threads */

int rank; /* the id of the current thread */

int value;

MPI_Init(&argc;, &argv;);

MPI_Comm_rank(MPI_COMM_WORLD, &rank;);

MPI_Comm_size(MPI_COMM_WORLD, &size;);

if (rank == 0) {

for (int i = 1; i < size; i++) {

value = i*i;

printf("Thread 0 performing a blocking send of %d to process %d\n", num, i);

MPI_Send(&value;, 1, MPI_INT, i, MY_MSG_TAG, MPI_COMM_WORLD);

}

} else {

MPI_Recv(&value;, 1, MPI_INT, 0, MY_MSG_TAG, MPI_COMM_WORLD, NULL);

printf("Finished a blocking receive of %d from process 0\n", num);

}

MPI_Finalize();

}

This would be launched with the command mpirun on both gates and blacklight, which can control the number of processors used:

mpirun -np 4 ./my_mpi_program

Again, we expect you to learn MPI on your own, but here are some links to get started:

- This article may be useful.

- A tutorial with some example code: http://www.lam-mpi.org/tutorials/nd/.

- An explanation and reference https://computing.llnl.gov/tutorials/mpi/.

- Some official reference material http://www.open-mpi.org/doc/v1.6/.

What you need to do (Please read this section carefully.)

Download the starter code at /afs/cs.cmu.edu/academic/class/15418-s13/assignments/asst3.tgz.

We have given you starter code for two serial solutions to the wandering salesman problem. (One solution is written in OpenMP and the other in Open MPI.)

You need to improve both programs to solve the problems in parallel and achieve good scaling. Your goal is to achieve as close to close-to-linear speedup as possible for both programs on the six core GHC 3K machines and on up to 128 cores on Blacklight. Note that due to Hyper-threading, the GHC machines report themselves as having 12 virtual cores. (You'll need to think about this in your analysis.)

Then, produce a brief report describing and analyzing your solution. Your report should include the following items for each of the two programs you write:

- A thorough description of how your program works. Describe the general program flow and all significant data structures. How is the problem decomposed? How is the work assigned to threads? Where is the communication and synchronization? What were your initial solutions and how did you arrive at your final solution? Your writeup should include a description of your final algorithm and experimentation that is sufficiently detailed so that we are convinced you know how it works and so that we do not have to read your source code to understand what you did and how you did it.

- Execution time and speedup for

input/distancesfor 1-12 virtual cores on the GHC 3000 machines, and 1, 2, 4, 8, 16, 32, 64, 128 processors on Blacklight. Please include graphs. - Discuss the results you expected and explain the reasons for any non-ideal behavior you observe. In particular, does your program achieve perfect speedup using six threads on the GHC 3000 machines? Does it achieve 12X speedup using 12 threads? (Do you expect it to? Why or why not?) If your program does not achieve perfect speedup, please explain why: Is it due to work imbalance? Communication/synchronization overhead? Is the code performing redundant (or unnecessary) work? Is it possible to achieve better than perfect speedup? Provide measurements to back up your explanations.

Grading

- The performance (scaling) of your OpenMP implementation is worth 40 points.

- The performance (scaling) of your MPI implementation is worth 40 points.

- Your writeup is worth 20 points.

If you want to modify the serial implementation or anything else in the starter code, feel free to do so (you might want to bounce your idea off the staff before doing so), but you need to report your speedup relative to the improved serial implementation. Remember, the point of this assignment is scaling and parallelism - we care far less about the runtime than we care about the speedup. We expect your code to demonstrate near perfect scaling on the GHC 3000 machines. We will provide guidance on performance expectations on Blacklight shortly, once we see how the early starters are doing. If you start late, you probably will not be able to get full credit because it can take a long time to run jobs on Blacklight. You will not have significant opporunity to tune your code on Blacklight.

We expect that, much like in Assignment 2, you will ultimately try a number of solutions. We expect your write-up to contain detailed description of this optimization process. Remember, the point of 15-418 assignments is for you to learn how to reason about, write, debug, and profile a parallel program in order achieve the best speedup you can. If you acheive a good speedup and have a clear writeup that conveys an interesting experimentation process, you should expect to receive a good grade.

To help you measure your performance, we have created a script that will run your code on a few random datasets and upload the results to a public scoreboard on our server. Run ./timer.bash to time your code and submit your time to the server (note that timer.bash does not check your code for correctness). See the scoreboard here. (Note, the scoreboard is This is currently still in development but should be fairly stable at this point. Note: This is not an autolab scoreboard like in 213. We realize it is very easy for you to fake your results or generally disrupt the presentation of the results. We ask that you please don't do this so the TAs can spend more time helping you rather than cleaning up the website. The scoreboard was implemented for fun, so you can see how you are doing against the rest of the class.

Some things to keep in mind

- You probably want to start by looking at

src/wsp.cppbut you can modify any file you need to (hint - you might need to modifysrc/wsp_serial.cppas well). - You can test correctness by comparing the output of your parallel code to the output the starter code. Try testing small examples before working on large problems.

- A solution that works naturally for OpenMP might not be easy for Open MPI.

- Specifically tuning your program for one dataset is not an interesting solution to this problem. In fact performance on one input can be misleading due to the nature of branch-and-bound problems, since a different order of exploration might find a short path quicker, leading to less work. We are looking for good performance on average across different workloads. This is why we gave you

mkinput.py, a tool that generates random workloads. - Consider the trade-offs between fine grained synchronization and performing unecessary extra work.

- To use Blacklight, you will submit your programs into a job queue. The Blacklight queue scheduler will then run on a subset of processors on the machine. Blacklight's queue can have long wait times - last year's wait times were on the order of hours. We highly recommend you spend the earlier stages of the project debugging on the GHC 3K machines first before trying to scale up.

- The article How to use Blacklight may be useful to look at.

- Be careful about variable scoping when you are using OpenMP.

- Profilers are powerful tools to help you understand the performance of your programs.

Environment Setup

For this assignment you will be using the GHC 3000 machines and Blacklight, a 4096-core supercomputer hosted at the Pittsburgh Supercomputing Center (PSC). You will first get your code running well on the 6-core (12-virtual cores, due to Hyper-threading) machines in GHC 3000. (Note you can develop and test your code on any machine, but performance should be reported on the GHC 3000 machines. Once your code scales well on these machines, you'll have the opportunity to test and improve its scalability on a supercomputer.

GHC 3000 Setup Instructions

The Gates 3000 machines are ghc27-50.ghc.andrew.cmu.edu. Also, you can verify that you are on a correct machines by running 'less /proc/cpuinfo' and confirming that Linux reports 12 virtual cores.

The six-core, 3.2 GHz Intel Xeon CPU in these machines is described in detail here.

On the GHC machines, add /usr/lib64/openmpi/bin to your PATH and /usr/lib64/openmpi/lib to your LD_LIBRARY_PATH environment variables. You should use the GHC machines for the initial debugging and testing of your code. Note: as of right now, MPI is not available on ghc46, ghc49, or ghc75. Computing services should have this fixed shortly.

Blacklight Setup Instructions

You need to first create an account on XSEDE and then tell the course staff. Once you have created an account, fill out this form so we know your Blacklight username and can give you access to use the class quota. It might take us a few days to give you access, so create your account early! If you wait until the last minute you might not get access in time. You will also need to run module load openmpi/1.6/gnu (see below for more details).

There are two ways to access Blacklight to run your code. The first is through the XSEDE web portal. You will need to login into your XSEDE account at http://portal.xsede.org. Once logged in, navigate to "MY XSEDE", then "SSH Terminal" and select "Blacklight" in the dropdown menu to initiate an SSH session. The second is via ssh to blacklight.psc.teragrid.org (we expect that the latter option will be far more convenient). To login via ssh you may first need to change your Blacklight password by following the instructions found here: http://www.psc.edu/index.php/computing-resources/blacklight#password.

Running on Blacklight

Running on Blacklight presents its own unique set of issues. You should read the available information on Blacklight and do your best to search for answers on your own. The blacklight information page is a good place to start. Here are some tips and tricks to help you out:

To build

wspwith MPI, you need to first set all the proper envirnoment variables. Blacklight uses themodulesystem to make this easy, so you just need to run:module load openmpi/1.6/gnuYou might want to add this to your

.bashrcor equivalent.We have given you starter batch job files for both programs. To submit a job to the Blacklight batch queue:

qsub wsp.job

Note: we are intentionally not giving you debug job files because you should debug your code on the GHC machines. If you want to run timer.bash, you should modify wsp.job to call it (you might want to modify the OpenMP timer.bash to use omplace if you do so).

Hand-in Instructions

Hand-in directories have been created at:

/afs/cs.cmu.edu/academic/class/15418-s13/handin/asst3/<ANDREW ID>/

Remember: You need to execute aklog cs.cmu.edu to avoid permission issues.

- Please submit your writeup as pdf file named

writeup.pdf. Note - while you must hand in your code, your writeup should include a description of your final algorithm and experimentation that is sufficiently detailed so that we are convinced you know how it works and so that we do not have to read your source code to understand what you did. - Please submit your code in the file

handin.tar.gz. This tarball should be a complete copy of yourasst3/directory. To keep submission sizes small, please do amake verycleanprior to creating the archive, and remove any residual output, etc. When handed in, all code must be compilable and runnable! We should be able to simply untarhandin.tar.gz, make, then execute your programs without manual intervention.

Our grading scripts will rerun the checker code allowing us to verify your score matches what you submitted in the writeup.pdf. We will also run your code on other datasets to further examine its correctness.