In this article we discuss non-atomic bus transactions, the issues that arise from their use, and their benefits.

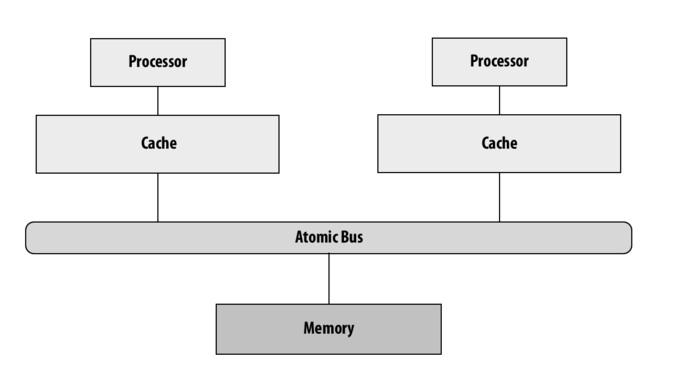

In an atomic bus transaction, one client places a request on the bus and another client then places the response on the bus. The whole exchange happens without interruption: no other transaction can use the bus in between.

Request using an atomic bus:

- Processor P1 gains access to the bus and puts its request on the bus.

- Memory reads and processes the request, then sends the response.

- The bus sits idle while memory processes the request, which could take many clock cycles. No other transaction can use it until the response has been sent.

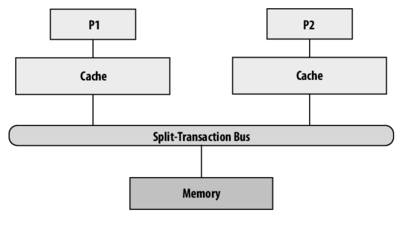

To avoid this inefficiency, each transaction is split in two: the request and the response are no longer grouped into a single atomic sequence of events. A bus that works this way is called a split-transaction bus.

Request using a split-transaction bus:

- Caches ask the arbiter for access to the bus.

- One cache is granted access and sends its request on the bus.

- The bus is then immediately free to carry the next request; it does not wait for the response.

- When memory finishes processing a request and has the data ready, it sends the response on the bus, independently of the handling of new requests.

A split-transaction bus can be thought of as two distinct buses: one for requests and a separate one for responses. Each of them is used atomically, carrying one whole request or one whole response at a time.
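To make the cost of an idle bus concrete, here is a rough Python sketch (a back-of-the-envelope model, not a description of any real bus) that computes when the last of several back-to-back reads finishes on each kind of bus. All cycle counts are hypothetical parameters. The split-transaction model assumes separate request and response buses, memory that can work on several requests at once, and responses sent in request order.

```python
def atomic_finish_time(num_requests, request_cycles, memory_latency, response_cycles):
    """Atomic bus: each transaction holds the bus from request to response."""
    per_transaction = request_cycles + memory_latency + response_cycles
    return num_requests * per_transaction


def split_transaction_finish_time(num_requests, request_cycles, memory_latency, response_cycles):
    """Split-transaction bus with separate request and response buses.

    Assumes memory can overlap work on several requests and that
    responses are sent in request order (a simplification).
    """
    request_bus_free = 0   # cycle when the request bus is next available
    response_bus_free = 0  # cycle when the response bus is next available
    for _ in range(num_requests):
        # The request occupies the request bus, which is then free again
        # for the next request; it does not wait for the response.
        request_bus_free += request_cycles
        data_ready = request_bus_free + memory_latency
        # The response waits until its data is ready and the response bus is free.
        start = max(data_ready, response_bus_free)
        response_bus_free = start + response_cycles
    return response_bus_free


if __name__ == "__main__":
    args = dict(num_requests=3, request_cycles=1, memory_latency=20, response_cycles=4)
    print("atomic:", atomic_finish_time(**args))
    print("split: ", split_transaction_finish_time(**args))
```

With these example numbers the sketch reports 75 cycles for the atomic bus and 33 for the split-transaction bus: the split-transaction bus overlaps the memory latencies of the three reads instead of paying for them one after another.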

Issues that come up as a result of this design:

Matching requests and responses

Since requests and responses are no longer combined into one atomic transaction, any number of other requests and responses can occur between a given request and its response. So when a response appears, there must be some way to match it back to the request it answers. This is done with a request table: when a request is issued, the arbiter assigns it a tag, and the request's address and command are placed in the table, indexed by the tag.
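A minimal Python sketch of such a request table, assuming a fixed pool of tags that are reused once a response has been matched. The class and method names are hypothetical, and the command strings `BusRd`/`BusRdX` are only illustrative labels.

```python
class RequestTable:
    """Sketch of a request table indexed by tag.

    A response carries only its tag; the table maps that tag back to the
    address and command of the original request.
    """

    def __init__(self, num_tags):
        self.entries = {}                       # tag -> (address, command)
        self.free_tags = list(range(num_tags))  # tags not currently in use

    def allocate(self, address, command):
        """Assign a free tag to a new request and record it; None if no tag is free."""
        if not self.free_tags:
            return None
        tag = self.free_tags.pop(0)
        self.entries[tag] = (address, command)
        return tag

    def match_response(self, tag):
        """Return the request that a response with this tag answers, and free the tag."""
        address, command = self.entries.pop(tag)
        self.free_tags.append(tag)
        return address, command


if __name__ == "__main__":
    table = RequestTable(num_tags=4)
    t_a = table.allocate(0x100, "BusRd")
    t_b = table.allocate(0x200, "BusRdX")
    # The response to the second request may arrive first and is still matched correctly.
    print(table.match_response(t_b))
    print(table.match_response(t_a))
```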

Conflicting requests

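Because several requests can be outstanding at once, two of them may target the same memory block, which can lead to an inconsistent state. To deal with this, conflicting requests are not allowed: a cache does not issue a request for a block that already has a request outstanding. This is enforceable because each cache keeps its own copy of the request table and can check it before issuing.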
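A sketch of the check a cache could make against its own copy of the request table before issuing a request. It assumes that two requests conflict when they target the same cache block, uses a hypothetical 64-byte block size, and represents the table as a dict from tag to (address, command).

```python
BLOCK_SIZE = 64  # assumed cache-block size in bytes (hypothetical)


def block_of(address):
    """Cache-block number that an address falls in."""
    return address // BLOCK_SIZE


def may_issue(address, request_table):
    """True if no outstanding request in this cache's copy of the table
    targets the same block; otherwise the cache holds its request back."""
    target = block_of(address)
    return all(block_of(addr) != target for addr, _command in request_table.values())


if __name__ == "__main__":
    table = {0: (0x100, "BusRd"), 1: (0x2C0, "BusRdX")}  # tag -> (address, command)
    print(may_issue(0x108, table))  # same block as 0x100, so the cache must wait
    print(may_issue(0x140, table))  # different block, so the request may be issued
```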

Flow control

Caches and memory maintain buffers for receiving data off the bus. Since these buffers are finite, they can fill up. If a request or response arrives while the receiving buffer is full, the receiver replies with a negative acknowledgement (NACK), and the sender retries the transaction later.
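A small sketch of NACK-based flow control, assuming a receiver with a fixed-capacity buffer and a sender that simply retries after each NACK. `ReceiveBuffer`, `send_with_retry`, and the `between_attempts` callback (standing in for time passing between retries) are hypothetical names.

```python
from collections import deque


class ReceiveBuffer:
    """Finite receive buffer that NACKs when it is full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.slots = deque()

    def offer(self, item):
        """Accept the item if there is room; otherwise reply with a NACK."""
        if len(self.slots) >= self.capacity:
            return "NACK"
        self.slots.append(item)
        return "ACK"

    def drain_one(self):
        """Consume one buffered item (the receiver making progress)."""
        return self.slots.popleft() if self.slots else None


def send_with_retry(buffer, item, max_attempts, between_attempts):
    """Retry after every NACK; between_attempts models time passing.

    Returns the attempt number that succeeded, or None if every attempt was NACKed.
    """
    for attempt in range(1, max_attempts + 1):
        if buffer.offer(item) == "ACK":
            return attempt
        between_attempts()
    return None


if __name__ == "__main__":
    buf = ReceiveBuffer(capacity=1)
    buf.offer("response for tag 0")
    # The buffer is full, so the first attempt is NACKed; the receiver drains
    # one entry before the retry, which then succeeds on attempt 2.
    print(send_with_retry(buf, "response for tag 1", max_attempts=3,
                          between_attempts=buf.drain_one))
```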

Reporting snoop results

It is not obvious when snoop results should be reported. Should they be reported during the request or during the response?

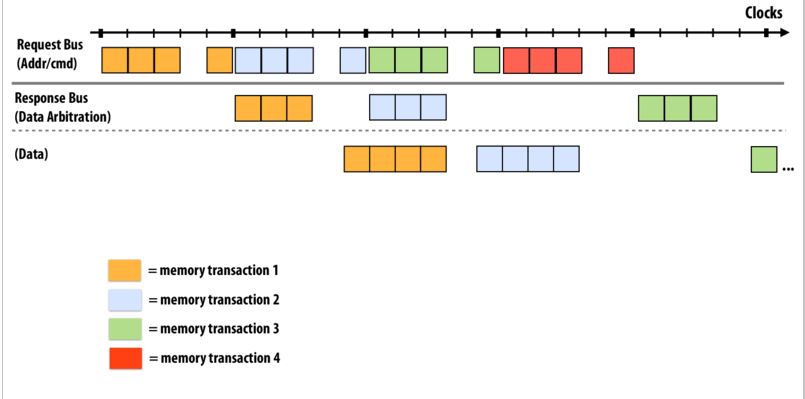

A Basic Design of a Split-Transaction Bus:

At most 8 requests can be outstanding at a time across the whole system. Responses do not have to arrive in the same order as the requests, but the order in which requests appear on the bus establishes the system's total order. When a buffer is full, the client can NACK a transaction, leading to a retry.
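The following Python sketch models the 8-request limit. Each request holds a slot until its response returns, responses may come back in any order, and a request that finds all 8 slots in use stalls until one frees up. The per-request latencies and the one-cycle issue time are hypothetical, and response-bus contention and NACKs are ignored.

```python
import heapq

MAX_OUTSTANDING = 8  # system-wide limit on outstanding requests


def simulate(latencies, issue_cycles=1):
    """Issue requests in order, with at most MAX_OUTSTANDING in flight at once.

    latencies[i] is the (hypothetical) number of cycles request i waits for
    its response after its request has been placed on the bus. Returns
    (issue, finish) cycles per request, listed in request order, which is
    the system's total order.
    """
    in_flight = []  # min-heap of finish cycles of outstanding requests
    t = 0           # earliest cycle the request bus is free
    schedule = []
    for latency in latencies:
        if len(in_flight) == MAX_OUTSTANDING:
            # All slots are in use: stall until the earliest response frees one.
            t = max(t, heapq.heappop(in_flight))
        issue = t
        finish = issue + issue_cycles + latency
        heapq.heappush(in_flight, finish)
        schedule.append((issue, finish))
        t = issue + issue_cycles
    return schedule


if __name__ == "__main__":
    # A slow request followed by a fast one: the second response returns first.
    print(simulate([50, 5]))
    # Nine slow requests: the ninth cannot issue until the first response returns.
    print(simulate([100] * 9)[-1])
```

`simulate([50, 5])` returns `[(0, 51), (1, 7)]`: the second response arrives before the first, while both requests keep their positions in the request order. In the second call the ninth request issues at cycle 101, when the first response frees a slot.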

Read miss: cycle-by-cycle bus behavior:

PHASE 1:

A request made by a cache upon a read miss can be divided into roughly 5 stages:

1) Request arbitration: cache controllers present their requests to the bus arbiter.

2) Request resolution: the bus arbiter grants access to one requester. All caches allocate space in their request tables for the new entry, and special arbitration lines indicate the request's tag.

3) The bus winner places the command and address on the bus.

4) Caches perform the snoop: they look up tags, cache states, etc., checking whether the current request concerns them at all. No bus activity happens in this stage.

5) Caches acknowledge that their snoop results are ready, or signal that they could not finish in time.

PHASE 2:

The response data arbitration can be divided into the following 3 stages:

1) A responder signals its intent to respond to the request with tag T.

2) The data bus arbiter grants access to one responder.

3) The original requester signals whether it is ready to receive the response. If it is busy and cannot accept the data, it responds with a NACK.

PHASE 3:

If the requester signals that it is ready to receive the data, the responder places the data on the bus. The other caches present their snoop results for the request along with the data, and the request's entry is freed in every cache's request table.
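A Python sketch of phases 2 and 3 for a single response. The fixed-priority arbitration (the first responder in the list wins), the representation of each cache's request table as a dict from tag to request, and all function names are assumptions; snoop results and cycle timing are not modeled.

```python
def deliver_response(tag, responders, requester_ready, request_tables):
    """Phases 2 and 3 for one response with the given tag.

    responders: clients that signaled intent to respond to this tag.
    requester_ready: whether the original requester can accept the data.
    request_tables: every cache's copy of the request table (tag -> request).
    Returns ("NACK", winner), ("DATA", winner), or None if nobody responded.
    """
    if not responders:
        return None
    # Phase 2: the data bus arbiter grants the bus to one responder
    # (fixed priority here, an assumed policy).
    winner = responders[0]
    if not requester_ready:
        # Requester is busy: it NACKs, the entry stays, and the responder retries later.
        return ("NACK", winner)
    # Phase 3: data goes on the bus and every cache frees the entry for this tag.
    for table in request_tables:
        table.pop(tag)
    return ("DATA", winner)


if __name__ == "__main__":
    tables = [{3: (0x40, "BusRd")} for _ in range(4)]  # four caches, one pending request
    print(deliver_response(3, ["memory"], False, tables))  # requester busy
    print(deliver_response(3, ["memory"], True, tables))   # data delivered, entries freed
    print(tables)
```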

You can think of these three phases as running on different buses, or on different parts of the bus. Hence, although a single request must go through the phases serially, different requests can be in different phases at the same time. For example, phase 1 for request 3, phase 2 for request 2 and phase 3 for request 1 can proceed in parallel. This lets us pipeline these operations.
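The overlap can be pictured with a simple slot-by-slot schedule. This Python sketch assumes every phase takes exactly one time slot and has its own bus resources, so a new request can enter phase 1 in every slot; real phases take different numbers of cycles, so the slot counts are only illustrative.

```python
PHASES = ("phase 1", "phase 2", "phase 3")


def pipeline_schedule(num_requests):
    """Which request occupies each phase in each time slot.

    Assumes every phase takes one slot and has its own bus resources,
    so a new request can enter phase 1 in every slot.
    """
    num_slots = num_requests + len(PHASES) - 1
    schedule = []
    for slot in range(num_slots):
        active = {}
        for depth, phase in enumerate(PHASES):
            request = slot - depth  # request that entered phase 1 `depth` slots ago
            if 0 <= request < num_requests:
                active[phase] = request + 1  # 1-based request numbers, as in the text
        schedule.append(active)
    return schedule


if __name__ == "__main__":
    for slot, active in enumerate(pipeline_schedule(3)):
        print(slot, active)
```

In slot 2 the schedule has request 3 in phase 1, request 2 in phase 2 and request 1 in phase 3, the overlap described above. Under the same one-slot-per-phase assumption, three requests finish in 5 slots instead of the 9 a strictly serial bus would need.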

Questions:

- Compare and contrast atomic and non-atomic bus transactions. What are the pros and cons of each?

- Assume an individual request takes 5 clock cycles before the bus can accept the next request. What issue can arise from limiting the number of outstanding requests to 8?

- Explain why it is important that responses need not be in the same order as requests. In what situations could forcing responses into request order cause delays?

- We have discussed a way of dealing with conflicting requests by not allowing them. What if the conflicting request is a read miss? That is, what if processor P1 wants to read a memory address x, but notices that P2 has a pending memory request for address x? Is there an optimization that could help us handle this case better?

Answer to question 3:

Allowing responses to return out of order, interleaved with other requests, lets quick requests be served without waiting for slow requests issued before them.

For example, suppose P1 requests A, which is in memory, and then P2 requests B, which is in P1's cache. If responses must be returned in the same order as requests, the second request is served only after P1 receives its response from memory. If we drop the ordering restriction, the second request can be served without waiting for the earlier request to memory. The whole system's throughput increases, and some requests' latency is reduced as well.

This is also the reason why we use non-atomic bus transactions.
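The example from the answer can be checked with a few lines of Python. The ready times (100 cycles for memory, 15 for P1's cache) are made-up numbers, and the model ignores contention for the response bus; it only shows how forcing request order on responses delays the fast request.

```python
def completion_times(requests, in_order):
    """requests: (name, ready_cycle) pairs in the order they were issued.

    ready_cycle is when the responder has the data. With in_order=True a
    response cannot be delivered before any earlier request's response.
    Response-bus contention is ignored.
    """
    done = {}
    previous = 0
    for name, ready in requests:
        delivered = max(ready, previous) if in_order else ready
        done[name] = delivered
        previous = delivered
    return done


if __name__ == "__main__":
    # Hypothetical latencies: memory is slow, a cache-to-cache transfer is fast.
    reqs = [("P1 reads A (memory)", 100), ("P2 reads B (P1's cache)", 15)]
    print(completion_times(reqs, in_order=True))   # B waits for A
    print(completion_times(reqs, in_order=False))  # B is served as soon as it is ready
```

With in-order responses both requests complete at cycle 100; without the ordering restriction, P2's request completes at cycle 15.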
