This lecture introduces a basic implementation of cache coherence. We have discussed cache coherence protocols before, but we only talked about very abstract protocols. We talked about how coherence protocols enabled the use of shared address space. We have described what messages and transactions needed to be sent and we have also assumed messages and transactions were atomic. In this lecture, we will discuss a basic implementation of our desired protocols in a real machine with a shared bus for interprocessor communication. The goals of our implementation is:

- Correctness. Correctly implemets cache coherence protocol and also adhere to specified consistency model.

- High performance

- Minimize cost. It can be minimum design time or minimum number of transistors.

Before we go into the details of the implementation, we first need to introduce three concepts: Deadlock, livelock and starvation.

Deadlock

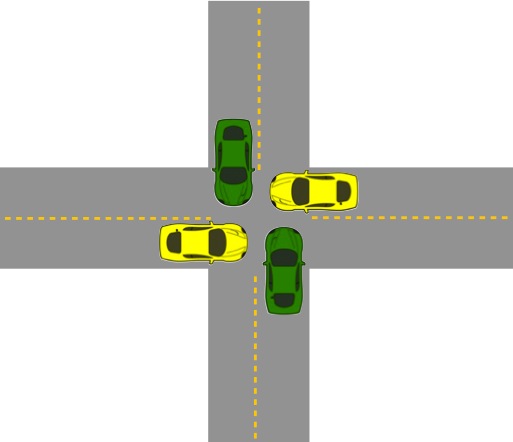

Deadlock is a state where a system has outstanding operations to complete, but no operation can make progress. Deadlock can arise when each operation has acquired a shared resource that another operation needs. As a result, no one can proceed since they are all waiting for a shared resource another process is holding. As illustrated in figure 1, each car represents a process. In order to cross the intersection, they need to obtain shared resources, free lanes. There is no way for them to make progress in this state unless someone backup.

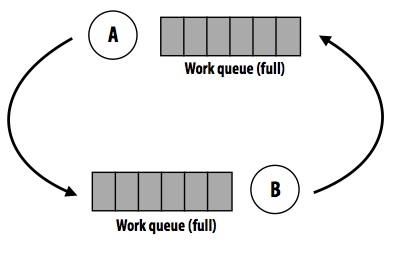

Figure 2 illustrates a typical deadlock in computer systems. When process A tries to put work into process B's work queue, process B tries to put work into process A's work queue and both work queues are full, no process can proceed since each process is waiting for empty room in the other process' work queue.

Figure 2 illustrates a typical deadlock in computer systems. When process A tries to put work into process B's work queue, process B tries to put work into process A's work queue and both work queues are full, no process can proceed since each process is waiting for empty room in the other process' work queue.

Livelock

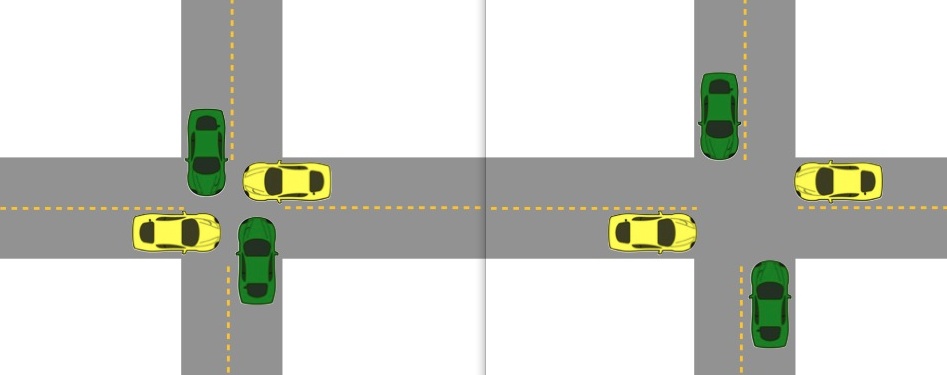

Livelock is similar to deadlock except that every process is doing useless work instead of waiting, as illustrated in the figure 2. Livelock is a state where a system is executing many transactions/ operations, but no thread is making meaningful progress. A typical example in computer system is operations continually abort and retry.

Starvation

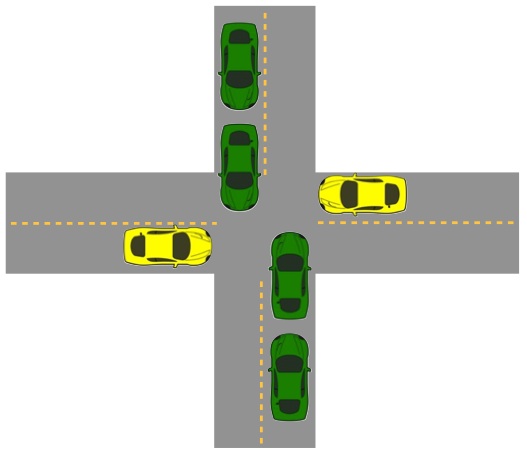

Stratvation is a state where a system is making overall progress, but some processes make no progress. As illustrated in figure 4, if yellow cars need to yield to green cars, yellow cars cannot make any progress unless all green cars have passed. Different from deadlock, starvation is usually not a permanent state. As soon as green cars pass, yellow cars can proceed.

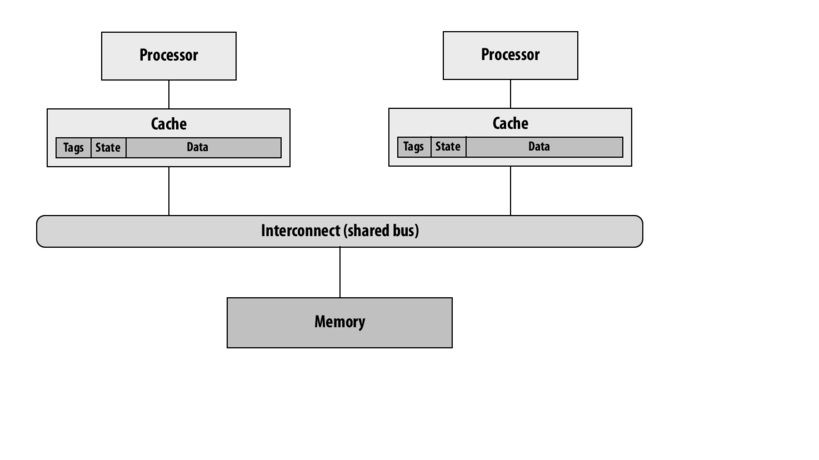

A Basic Implementation of Snooping:

Basic System Design:

In this basic implementation, each cache needs to deal with two tasks: one task is to handle the load and store request from its local processor, the other task is to broadcast the Coherence-related activity over the shared bus. So when the "cache miss" happens in this basic system, the cache will stall the processor, and do the corresponding operations on the multi-processor atomic bus. In more details, when performing BusRd or BusRdX operation, no other bus transactions are allowed between issuing address and receiving data, while performing BusWr operation, the target address and target data are sent simultaneously and received by memory before any other transactions are allowed.

In this basic implementation, each cache needs to deal with two tasks: one task is to handle the load and store request from its local processor, the other task is to broadcast the Coherence-related activity over the shared bus. So when the "cache miss" happens in this basic system, the cache will stall the processor, and do the corresponding operations on the multi-processor atomic bus. In more details, when performing BusRd or BusRdX operation, no other bus transactions are allowed between issuing address and receiving data, while performing BusWr operation, the target address and target data are sent simultaneously and received by memory before any other transactions are allowed.

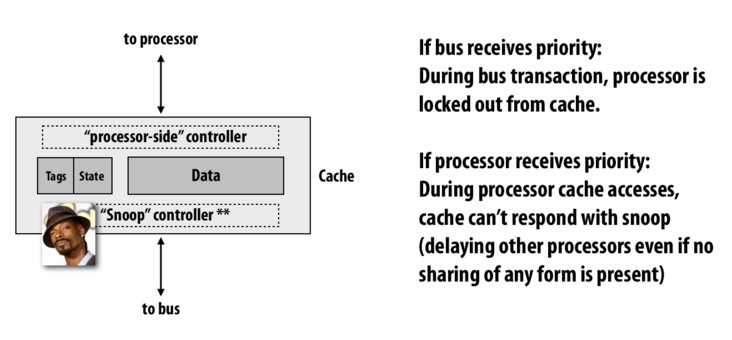

However, in terms of the two tasks describe above, the cache need to make response to two clients' requests (one is processor, the other is bus). Since both requests from processor and bus require tag lookup, if the bus gets the priority on cache, then during the bus transaction, processor is locked out from cache; otherwise, if the processor gets the priority, then during the processor cache access, cache cannot response with snooping.

So in this poor design, we will encounter a performance problem if one processor always sending coherence requests to the cache, then all the other processors will encounter cache miss problem. The bus needs to do frequent tag lookup in the cache, which will cause the cache are completely busy dealing with these coherence traffic on the bus. In this situation, other processor cannot even finish a load issue until all the other bus transactions are done. Therefore, the coherence traffic will kill the performance of the local processors. Conversely, if one local processor get the priority of the cache, then it can make its data do busy tag lookup and it will locked up other processors, in that case, this processor will hold up the rest of the system.

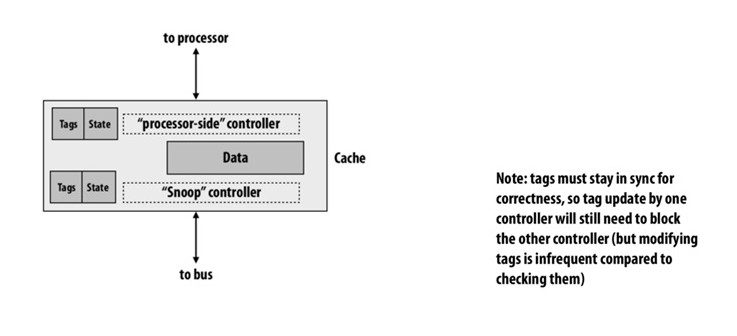

So there are two optional techniques to solve the challenge. One is using duplicate tags; the other is using multi-ported tag memory. These techniques allow both of the processor-side and snoop controller do access to the cache simultaneously.

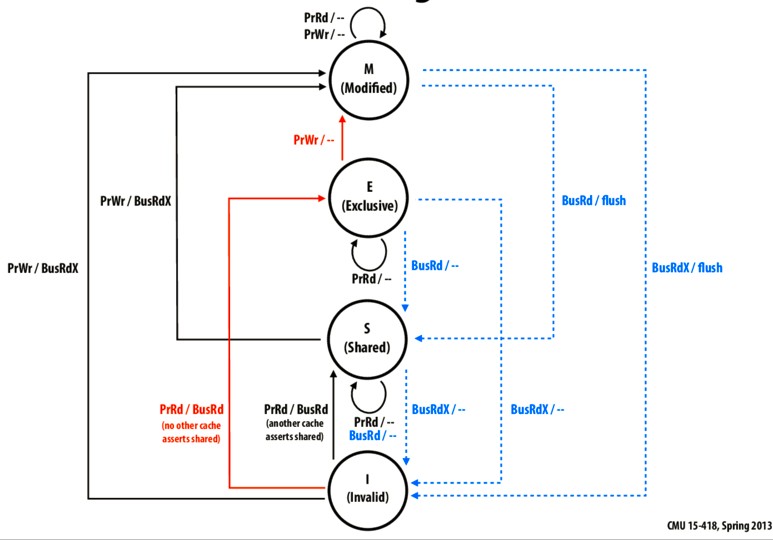

Reporting snoop results in MESI protocol

Now we consider how does MESI actually work.

Remember in MESI, whenever a transaction like read miss happens, all other processors have to respond. They have to tell the processor that just missed whether or not the value exists in their own caches because the processor that loading tha data needs to know whether it should load the data into E or S state.

One method to deal with this is such that for all other processors, if it holds the data, responds "nothing"; otherwise, responds "the data".

One problem of this method is how to determine when other processors respond and how much time we should wait. We can assert the each processors would respond in fix number of circles.

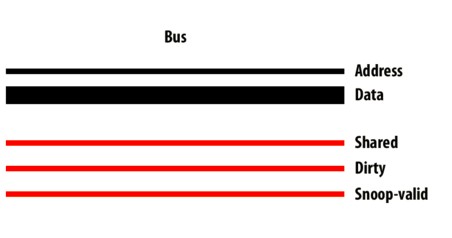

Now we add three new wires:

The shared wire is "OR" of shared state in all processors. A processor would set it to "1" if it holds the data and "0" otherwise.

The dirty wire is "OR" of dirty state in all processors. A processor would set it to "1" if the data in its cache is dirty and "0" otherwise.

The snoop-valid wire, better call it snoop-invalid wire, is "OR" of snoop-invalid state in all processors. A processor would turn it off after it complete the operation of snooping. If this wire is in "0" state, it indicates that the request is seen by all processors.

Then there are two ways to know whether the request has been done.

- Wait for fix number of circles. All processor must respond in certain number of circles and it is an upper bound of waiting time.

- Observe the snoop-valid wire. If it is set to "0", all processors have completed their operation.

Handling write backs

Write backs involve two bus transactions.

- Flush dirty data back to memory.

- Load new data into cache.

Then the processor would wait double time for one write and one read. Ideally, we really like the read to be as soon as possible and write later.

In modern systems, we first take the dirty line and flush it in a buffer, then we immediately load data, and later, the buffer would write to the memory as it is supposed to do.

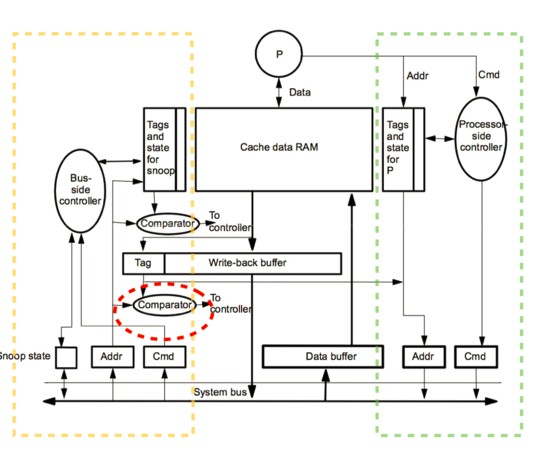

The yellow staff in the diagram are dealing requests from bus and the greens ones are dealing requests from processors.

Observe the yellow part: Addresses and commands are observed from bus, and cause tag checks. If cache controller needs to respond, it would do so.

Observe the green part: Processor issues load and store, and then checks the tags. If misses, its requests go out into address and command bus.

One possible problem is when we evict a dirty line, it is no longer in the cache. If some other processor askes for the data, normal thing is to check our cache, now it is not in the cache and the dirty line has not gone into memory. We need to fix this problem.

An easy way to do it add more logic to check whether the data is in buffer.

This check has to supply data, if the write-back buffer is hit, we should clear it.

So actions should be: 1. Hit the buffer 2. Observe data that needs to go to some processor 3. Send the data to another processor

Then, the data is not necessary to go to memory because it is in cache of other processor and is dirty there. We can skip write-back in this case.

Examples

- Example race condition

Here is an example. Suppose processor P1 and P2 suppose to write to the same cache line A simultaneously, and they are both in S state. Both processors want to write more or less at the same time. What's going to happen?

Suppose you allow P1 to go first then P1 "wins" bus access and sends BusUpg. What about P2? P2 is waiting for bus access and P2 must invaliate its line A when it receives BusUpg message. Now P2 does not have the cache line A since it is invaliated. Then P2 must change its pending BusUpg request to a BusRdx.

Cache must be able to handle requests while waiting to aquire bus AND be able to modify its own outstanding requests, otherwise there might be a deadlock. Here P1 has the bus resource and P2 needs the bus resource. P1 needs the resource in P2 to invaliate its cache line, but P2 has made a mistake and has changed its request state, so we have a situation that both processors are half-way through some transactions, and they need what the other processor has in order to make progress. It's just like an intersection example. This is an example of deadlock since things are not atomic.

- Fetch deadlock

Here is another example of deadlock.

P1 has a modifed copy of cache line B, but P1 has a request out to read a new cache line A. Now another processor needs B and BusRd for cache line B appears on bus while P1 is still waiting.

To avoid this deadlock, P1 must be able to serving incoming transactions while waiting to issue requests.

- Livelock

This is an example of livelock.

There are two processors writing to cache line B. P1 aquires the bus and it issues BusRdX. P2 throws its line away. But before P1 performs write, P2 aquires the bus and also issues BusRdX. Then P1 invalid its line. and so on.

How can we assure that this situation does not lead to a livelock? A write that obtains exclusive ownership must be allowed to complete berfore exclusive ownership is relinquished, that means, when you have exclusive ownership, before you ever invaliate it, you need to perform the write, otherwise livelock can happen. You have to take some steps to assure that when you start a transaction, you should complete it before you quit.

Questions:

1.How can we avoid starvation?

2.What is the performance problem in the basic poor design?

3.How to reduce waiting time for write backs?

4.Why are the examples above deadlock/livelock? What is the principle to avoid them?

This comment was marked helpful 0 times.