Note: Due to the size and complexity of the lecture, we only cover Data Versioning and Conflict Detection.

Motivation for Transactional Memory

Mutual exclusion via locks has been the traditional solution to manage shared state in a multithreaded process. Using locks presents programmers with a tradeoff between simplicity of code and performance. For example, a concurrent linked list implementation using a single global lock is simple to implement but yields poor performance. Fine-grained locking, like hand-over-hand locking, is much more difficult to implement correctly but can potentially have better performance. Using locks requires strict programming discipline in order to avoid deadlock, livelock, and starvation.

Transactional memory tries to address the above problem by providing a simple atomic{} construct. The construct is used to provide atomicity, isolation and serializability while hiding the implementation details from the programmer. A goal of using transactional memory is to provide most of the performance benefit with least effort. In the following text we present a few basic concepts used to implement transactional memory.

A more detailed discussion on transactional memory can be found here.

Data Versioning

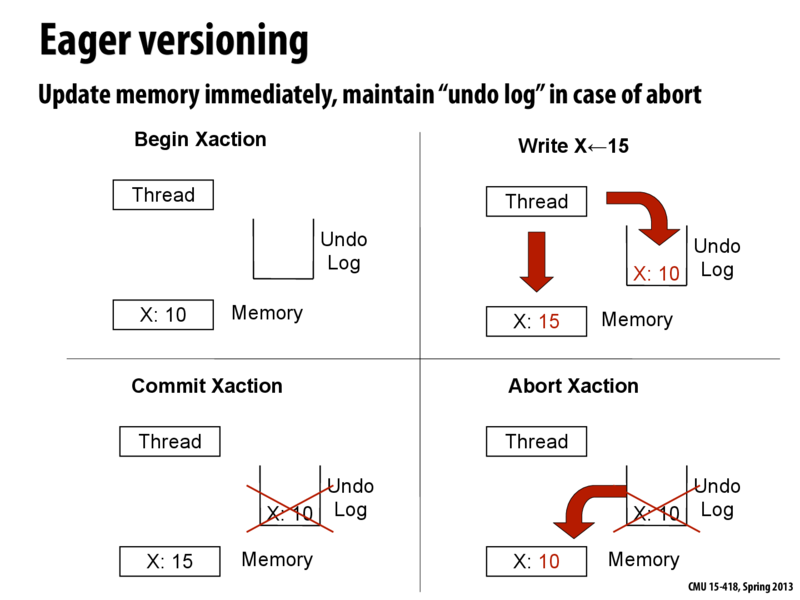

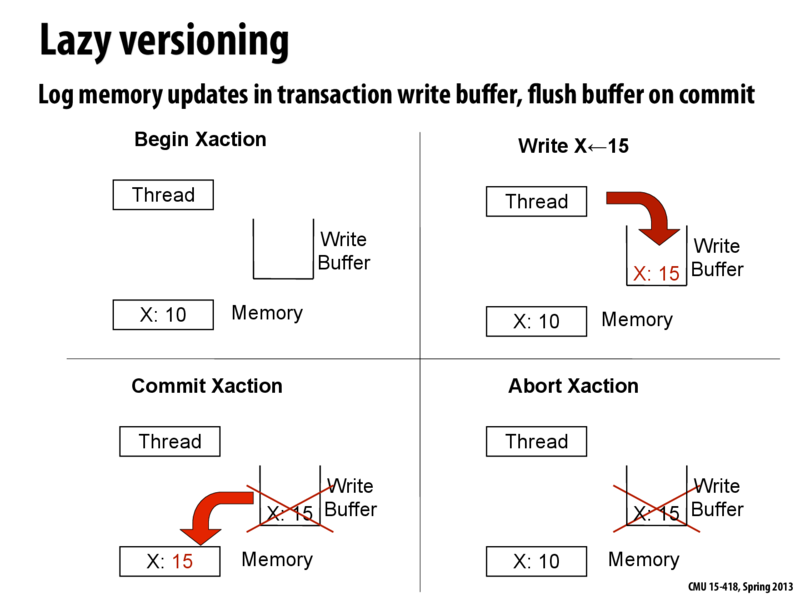

Data versioning allows you to keep track of old and new data in concurrent transactions. Keeping track of these versions allows committing or aborting a transaction. We talk about two approaches - "eager" versioning and "lazy" versioning.

Eager versioning just goes ahead and updates memory directly whenever it wants to, but keeps track of all of its changes in an "undo log" that works kind of like a stack. Every time memory is modified, the old values are stored in the stack. This way, if a conflict arises during the transaction, then the transaction can be aborted with each step of the undo log being performed in reverse order to restore the original state of memory as if the transaction had never begun.

With lazy versioning, any data to be written is first updated to a buffer in cache instead of directly writing to memory. In case of a successful commit, it is only at the end of the transaction that memory is updated. If the commit fails due to a conflict, the buffer is discarded and the transaction does not modify memory at all.

| Lazy | Eager |

|---|---|

| + Each store requires only one write to buffer. | - Each store requires a write to memory as well as a write to the undo log. |

| - Commits may be slower as it requires copying data from buffer to memory. | + Commits are faster since edited data is already stored in memory. |

| + Rollback is faster as it just requires dropping data from the buffer. | - Rollback is slower as old data has to be copied from the undo log. |

| + Handle faults in a better way as the memory is not left in an inconsistent state on crash | - Memory would be in an inconsistent state in case of a crash in middle of a transaction |

Conflict Detection

Conflicts during transactions must be recognized and handled properly to ensure correctness. For instance, if one transaction is attempting to write a value at an address but another transaction is trying to read or write that same address at the same time, we need to determine who gets to access it first. The system keeps track of each transaction's "read set" and "write set," which are the addresses read from or written to in each transaction, in order to detect conflicts and handle them appropriately. We talk about "pessimistic" detection and "optimistic" detection.

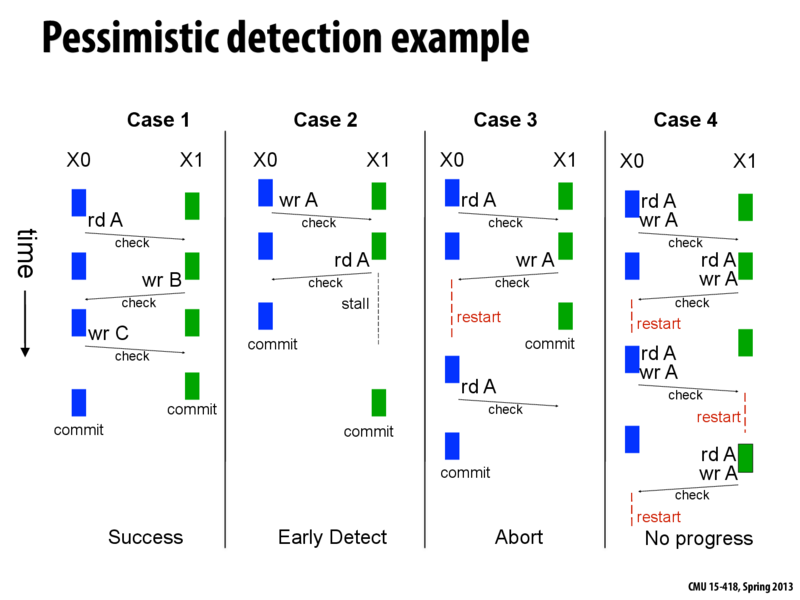

Pessimistic detection tries to detect conflicts early, as soon as a load or store is requested, as if pessimistic of the outcome. If it detects a conflict, then the contention manager will decide to either abort the transaction, or stall one of the transactions until the other completes. The contention manager decides which transaction gets priority through various policies; more details on the contention manager are below.

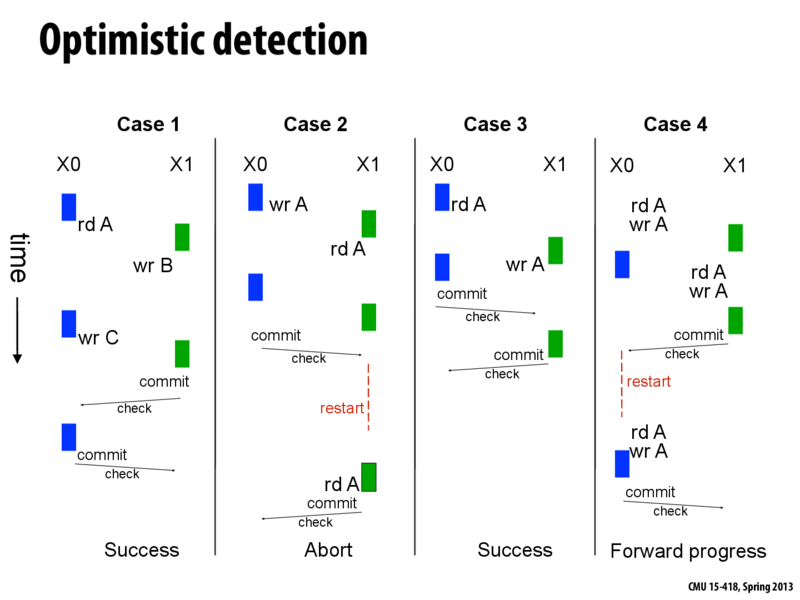

Optimistic detection tries to detect conflicts only at commit time. Thus, no information is required to be communicated on a per access basis. Before commiting, the "write set" is communicated to all other transactions check for conflicts.

In Case 1, both transactions X0 and X1 can run perfectly concurrently since there are no conflicts. Optimistic detection will likely to perform slightly better as there are fewer checks.

Pessimistic detection would be advantageous in Case 2. This is because when using optimistic detection, since we abort, we must redo part of the work in X1 whereas in pessimistic, a stall requires no such duplication of work (no restart).

An advantage of optimistic detection can be seen in Case 3. Both transactions X0 and X1 can successfully commit as conflicts are only detected at commit time. However, if pessimistic detection was used, X0 would have been restarted after the write to A in X1. Optimistic detection thus allows for more serializable schedules.

In case of a write-write conflict, as in Case 4, pessimistic detection may lead to a livelock. The trasaction which commits first succeeds in the case of optimistic detection, so there is no livelock.

Contention Manager

Note: This section is not covered in the slides, but may help you better understand how conflict detection actually works.

Conflict detection is managed by the contention manager, whose job it is to make transactions look as if they are sequentially executed. As long as the results of transations are the same as those of any possible sequential execution, the execution order can be allowed.

In each conflict, there are two sides: the attacker and the defender. The attacker is the transaction requesting access to a shared memory location. In pessimistic conflict detection, the attacker is the transaction issuing the load or store from an address that conflicts with another transaction currently using that address. In optimistic, the attacker is the transaction attempting to commit.

There are many ways to handle a conflict. The contention manager can choose to abort or stall either the attacker or defender, or do nothing at all. Different policies make different decisions. The Aggressive policy always immediately restarts either the attacker or the defender. The Polite policy guarantees transaction success after some restarts. The Randomized policy just randomly chooses either the attacker or defender to abort. Some policies use different heuristics, such as which transaction has completed more work, to make wiser decisions.

Instead of aborting, stalling can be more reasonable since it saves work of transactions, as seen in Case 2 of the pessimistic detection examples. Unfortunately, a scheme which always stalls can easily lead to deadlock: T0 writes X, T1 writes Y, T0 tries to read Y, stalls on T1, while T1 tries to read X, and stalls on T0 (similar to Case 2). To make stalling practical, deadlock avoidance techniques are needed. One such technique is called the Greedy algorithm which uses two rules to avoid deadlock:

- If T1 has lower priority than T0 or if T1 is waiting for another transaction, then T1 aborts when conflicting with T0.

- If T1 has higher priority than T0 and is not waiting, then T0 waits until T1 commits, aborts, or starts waiting (in which case the first rule is applied).

One important thing to mention is that conflicts don't necessarily lead to invalid execution order. Take Case 3 in figures given above as an example. As long as X0 never reads A again, both transactions could be allowed, since the result may just be the same as if X1 starts after X0 finishes (such as how the optimistic contention manager handled the conflict). However, if the pessimistic contention manager doesn't abort either transaction, it needs to keep more states associated with the transactions, which may increases the design complexity of the contention manager. Thus, when making decisions, contention manager needs to consider the trade-off between efficiency and concurrency.

Questions

In an optimistic detection transaction where the committer always wins, an "attacker" transaction that wants to commit may have to read some value of another "defender" transaction that hasn't been committed. If the transaction to commit always wins the conflict, how can the commit yield the correct answer?

Is it possible for an eager transaction with pessimistic conflict detection using stalls instead of aborts to deadlock?

For the three following scenarios, which data versioning (lazy or eager) would you expect to be faster?

- Storing data

- Committing

- Aborting (Rolling back)

Storing data: eager. This comment was marked helpful 0 times.

Committing: eager.

Aborting: lazy.

Why is it the case that if two transactions using pessimistic conflict detection with eager data versioning are attempting to undo at the same time, their writes to memory from their respective undo logs don't conflict with each other?

With pessimistic conflict detection, one of the two transactions will detect write conflict before it can do the write. Thus, there is no chance that two transactions both have the undo logs for the same memory. And then the undo log will not conflict with each other. This comment was marked helpful 0 times.

The "contention manager" is responsible to decide using stalls or aborts, so deadlock could be avoided in this way.

This comment was marked helpful 0 times.