Note:Lecture 5's scope is too large to cover in an article of a reasonable length, so we're going to cover a subset of its topics: how the abstractions provided by CUDA map down to its implementation on graphics hardware.

Host vs. Device Execution

CUDA differentiates between the host, the computer's CPU and main memory and device, the GPU. In CUDA programs, functions specify both on which device they are run and on which devices they are callable from. Functions marked __global__ execute on the device and are callable from both the host, and on newer GPUs (compute capability 3.x+), the device as well. These functions are known as kernels. Functions marked __device__ execute on the device and are only callable from the device. Functions either marked __host__ or unmarked execute on the host and are only callable from the host.

Implementation

Code run on the device is compiled into an intermediate bytecode format. When a kernel is run for the first time, the CUDA runtime compiles it to its machine code appropriate for the specific GPU and transfers the program onto the device.

Thread Hierarchy

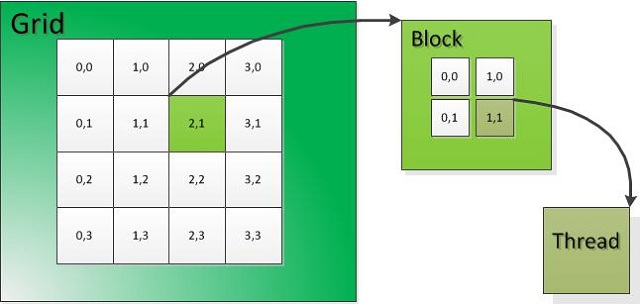

The basic unit of execution in CUDA is the thread. Threads are organized into blocks which are themselves organized into a grid.

The dimensions of a block and the grid are specified at kernel launch time:

dim3 blockDim(16, 16);

dim3 gridDim(10, 10, 10);

my_kernel<<<gridDim, blockDim>>>();

Grids and blocks can be one-, two- or three-dimensional. In the example above, each block consists of 16 * 16 = 256 threads and the grid consists of 10 * 10 * 10 = 1000 blocks. CUDA will then launch and manage 256 * 1000 = 256,000 threads for us.

Threads are given access to their position as well as the grid and block layout through four built-in variables: threadIdx, blockIdx, blockDim and gridDim. Continuing the example above, if we had

__global__ void my_kernel() {

printf("I am at position (%d, %d) in the block at position (%d, %d, %d)\n"

"My global position in the grid is (%d, %d, %d)\n",

threadIdx.x, threadIdx.y,

blockIdx.x, blockIdx.y, blockIdx.z,

blockIdx.x * blockDim.x + threadIdx.x,

blockIdx.y * blockDim.y + threadIdx.y,

blockIdx.z * blockDim.z + threadIdx.z);

}

we would see a lot of output (512,000 lines!) looking like

I am at position (5, 7) in the block at position (5, 8, 3)

My global position in the grid is (85, 135, 3)

...

I am at position (0, 0) in the block at position (0, 0, 0)

My global position in the grid is (0, 0, 0)

...

I am at position (10, 0) in the block at position (9, 9, 0)

My global position in the grid is (154, 144, 0)

Note that both the execution order of threads within a block as well as blocks within a grid is undefined. However, all threads in a block will be scheduled at once.

Implementation

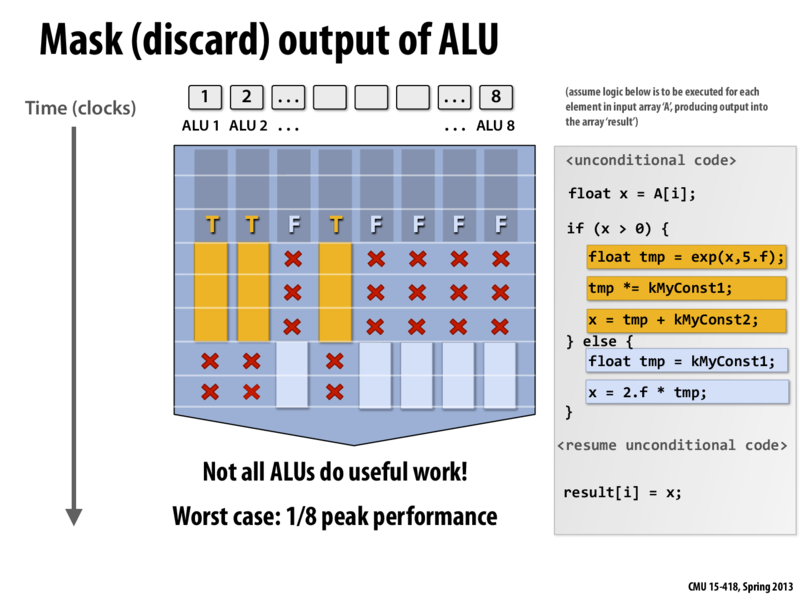

In an NVIDIA GPU, the basic unit of execution is the warp. A warp is a collection of threads, 32 in current implementations. A CUDA warp is similar to an ISPC gang; all threads in a warp execute the same instructions on different piece of data. Warp divergence is handled similarly to ISPC gang divergence. All threads in the warp execute every instruction of branches that any of them take.

Due to this fact, warps are not an implementation detail hidden from the CUDA programmer. The built-in variable warpSize specifies the number of threads per warp, and the allocation of threads to warps is well-defined. In addition, there are several CUDA functions that explicitly deal with communication between threads in a warp. Warp shuffle functions like __shfl allow warps to exchange data and warp vote functions like __all allow warps to branch together based on per-thread data.

GPUs consist of a number of Streaming Multiprocessors, or SMs. Each SM has a set of execution units, a set of registers and a chunk of shared memory. When a CUDA kernel is executed, the runtime will allocate warps and blocks to SMs according to their register and shared memory needs respectively.

The execution contexts of all warps on an SM are stored on the SM at all times. This makes context switches between warps essentially free. Every clock cycle, the SM's scheduler picks warps which are not blocked and executes a single instruction. Each SM can run several warps at once on separate execution units. This arrangement can be thought of as an extreme form of Hyper-Threading.

While current CUDA implementations will schedule every thread in a given block on the same SM at the same time, this is not a guarantee provided by CUDA. If future implementations provide fast shared memory between cores, threads in a block could be scheduled on separate SMs.

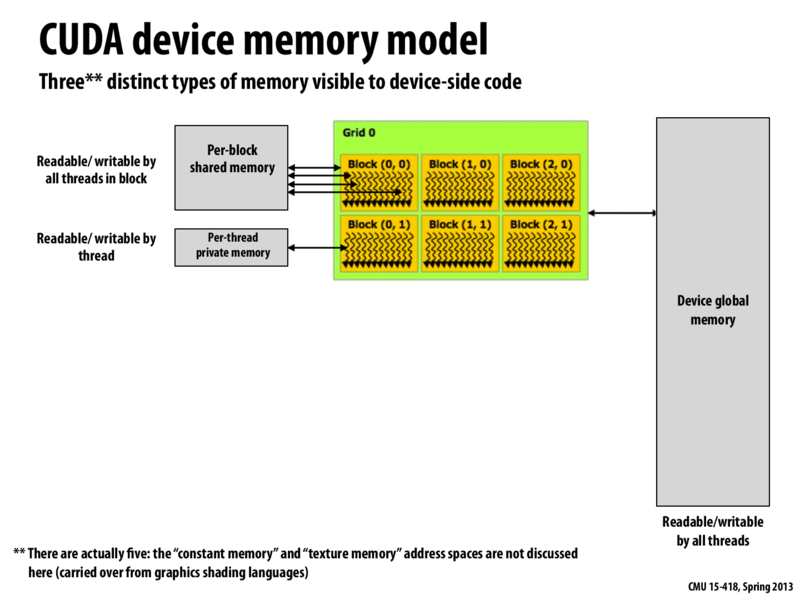

Memory

CUDA differentiates between several generic types of memory on the GPU: local, shared and global. Local memory is private to a single thread, shared memory is private to a block and global memory is accessible to all threads. This memory is similar to main memory on a CPU: a big buffer of data.

In addition, CUDA provides two more specialized types of memory: constant and texture. Constant memory can be used to store immutable data visible to all threads. Textures provide access to arrays with specialized input and output formats. Texture arrays can be accessed with both normal integral coordinates and normalized floating point coordinates. CUDA will interpolate between nearby array entries when returning the value, which can be either in the array's native format or as a normalized floating point number.

CUDA provides functions to allocate global memory buffers. One such function of interest is cudaMallocPitch, designed for allocating two-dimensional arrays. It guarantees that each row of the array will be properly aligned to ensure efficient access.

Implementation

GPUs have a typical memory hierarchy. Thread local memory is located on-chip and is extremely fast. Shared memory is larger but slightly slower and global memory is much larger but even slower. Modern GPUs provide an on-chip global memory cache. Interestingly, both shared memory and the global memory cache use the same on-chip storage location. The amount of memory reserved for each application is configurable on a per-kernel basis.

The GPU has infrastructure dedicated to textures. Floating point conversions, normalization and filtering are all handled by customized hardware and can be significantly faster than performing the same operation manually. In addition, texture memory is aggressively cached and not kept coherent with global texture memory during the execution of a kernel.

The GPU can coalesce concurrent reads by threads in a warp into transactions of 32, 64 or 128 bytes. However, these accesses must be aligned to their size. A read from a misaligned set of addresses must be split into multiple transactions, significantly impacting the efficiency of the program.

Barriers and Synchronization

CUDA's basic method of synchronization is __syncthreads(). A thread calling __syncthreads() will block until

- Every other thread in that thread's block has also called

__syncthreads(). - Every access of global and shared memory before the call to

__syncthreads()by every thread in the block has been made visible to every other thread in the block.

The host can syncronize with kernels via cuda*Synchronize() functions. For example, cudaDeviceSynchronize() blocks the host until all preceding CUDA commands have completed.

CUDA also has a set of functions providing atomic updates of shared and global memory. For example, atomicAdd(n, v) atomically adds v to the number stored at n and returns *n's old value.

You may note that CUDA does not provide any functions allowing global synchronization over the entire grid on the device. This is intentional. When the CUDA runtime places a warp on a SM, that warp remains on that SM until it finishes executing. Imagine that such a function, __crazy_global_syncthreads() existed. Consider the following program:

__global__ void my_kernel() {

// stuff

__crazy_global_syncthreads();

// stuff

}

int main() {

dim3 blockSize(32, 32);

dim3 gridSize(100, 100, 100);

my_kernel<<<gridDim, blockDim>>>();

}

This will create way more threads than the GPU can run concurrently. Eventually, every scheduled thread will block in __crazy_global_syncthreads(). Then we're stuck, since the rest of the threads in the program will never run! This is one situation where the hardware implementation of the GPU significantly affects the type of algorithms that can be implemented with CUDA.

References

The CUDA C Programming Guide has detailed descriptions of both CUDA's abstractions and the hardware implementation underlying them.

Questions

- Shared memory is located on each chip and it is possible to configure it to be used as a cache. Many of the examples visited in the slides demonstrated moving data from global memory to local memory before use. In what situations would it be better to explicitly move data than to rely on caching?

- Shared memory is arranged in banks, such that every 32nd or 64th word is in the same bank. As long as different words in the same bank aren't accessed simultaneously, the loads can be performed simultaneously. Why might this have been chosen? What access patterns does it favor?

- Given that CUDA assumes the order of execution of the blocks is insignificant in what ways might it be possible to ensure work is assigned in a manner that doesn't result in many idle threads?

- Why might CUDA designers have chosen to allow data sharing between blocks, rather than warps? What trade offs are there for this decision in terms of latency hiding and resource availability?