This explanation covers slides 22 onward.

Motivation

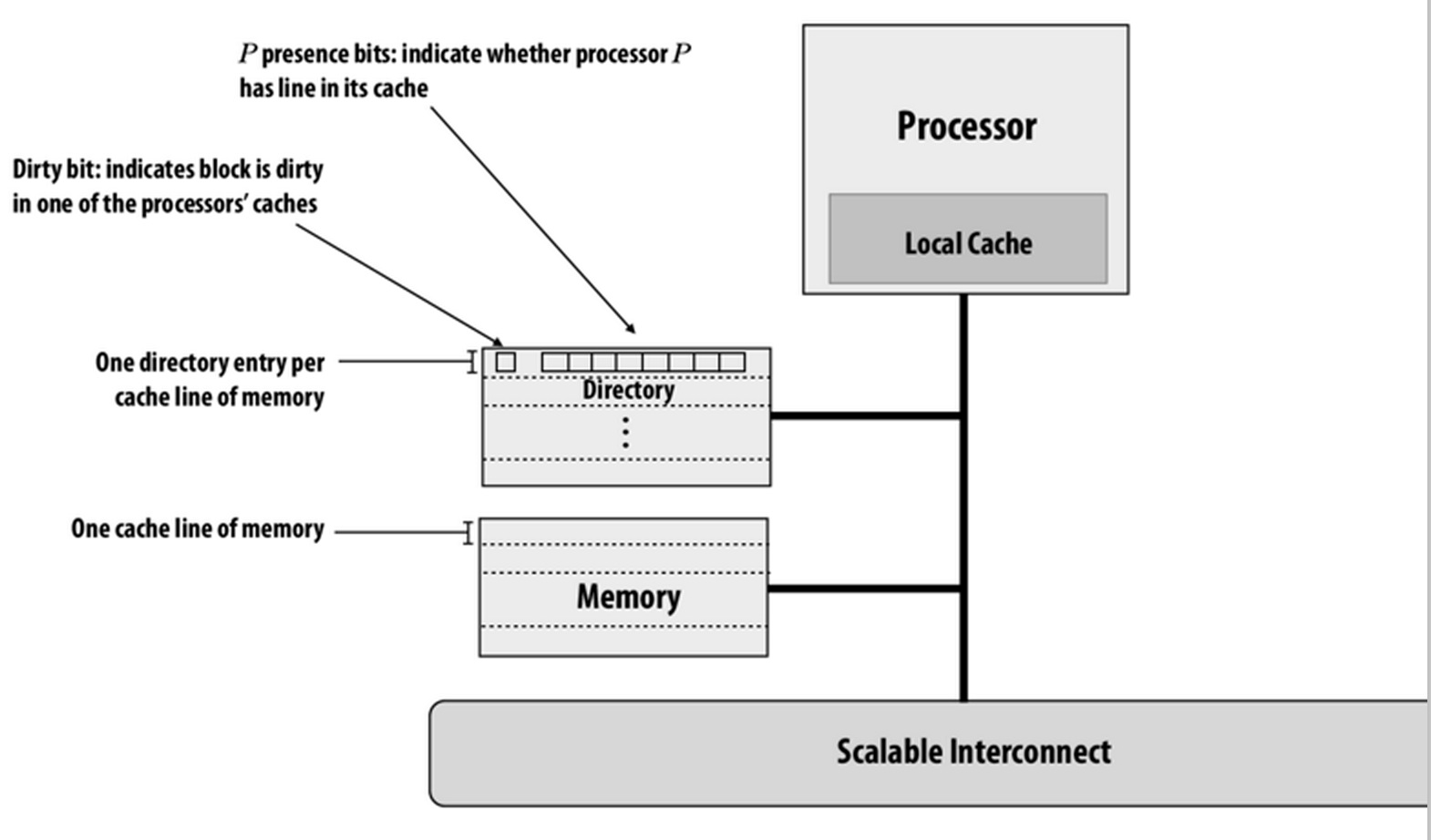

The directory-based cache coherence protocol is a scalable approach compared with snooping-based protocol. It avoids broadcasts by storing information about the status of the cache line in a directory and use point-to-point message communication. A simple directory overview is described in figure 1. However, the naive implementation of directory-based cache coherence has much storage overhead, which limits its performance.

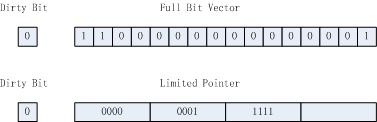

In the naive implementation (full bit vector), there is a partition directory for each node's local memory. Each direction entry stores information for each memory block. In each directory entry, there is a dirty bit and a full bit vector. The dirty bit indicates whether the block is dirty in the current owner processor's cache, and the Pth bit in the bit vector indicate whether processor P is a sharer of the block(having this block in its cache).

Without considering the size of the dirty bit or other extra data, the size of directory entry is P, each bit for one node. The number of entries equals to the number of blocks in memory, M. So in full bit vector design, the directory itself consumes P*M bits. Considering a system with 64 byte cache line, if it has 64 nodes, then a directory entry is 64 bit large, and the overhead is 12%. If it has 1024 nodes, the overhead is 200%. We can find that the full bit vector approach is not scalable in term of memory overhead. It can be rather space-consuming. However it indicates how we can improve the method, that is, to reduce P*M. Here we will talk about these issues and explain some optimization approaches.

General Possible Solutions

To reduce the storage overhead P*M, we can either reduce P or reduce M. Some direct solutions may come into your mind.

- Increase cache block size

Large cache block size leads to small number of blocks, which reduces M term. But it will increase the traffic cost of cache line replacement and may increase the possibility of cache misses due to false-sharing. - Represent multiple processors in one bit

Aggregate processors into several groups, and let presence bit indicate whether there is any processor in one group holding the data, which reduces P term. Within one group, snooping-based protocol can be used. But this approach generates more artifactual traffic because of the additional hierarchy.

These general solutions are just based on the theoretical analysis of the space overhead. However if we look further into the normal workload. we can come up with some other optimization solutions, such as limited pointer schemes and sparse directories. We will talk about each solution in detail then.

Limited Pointer Scheme

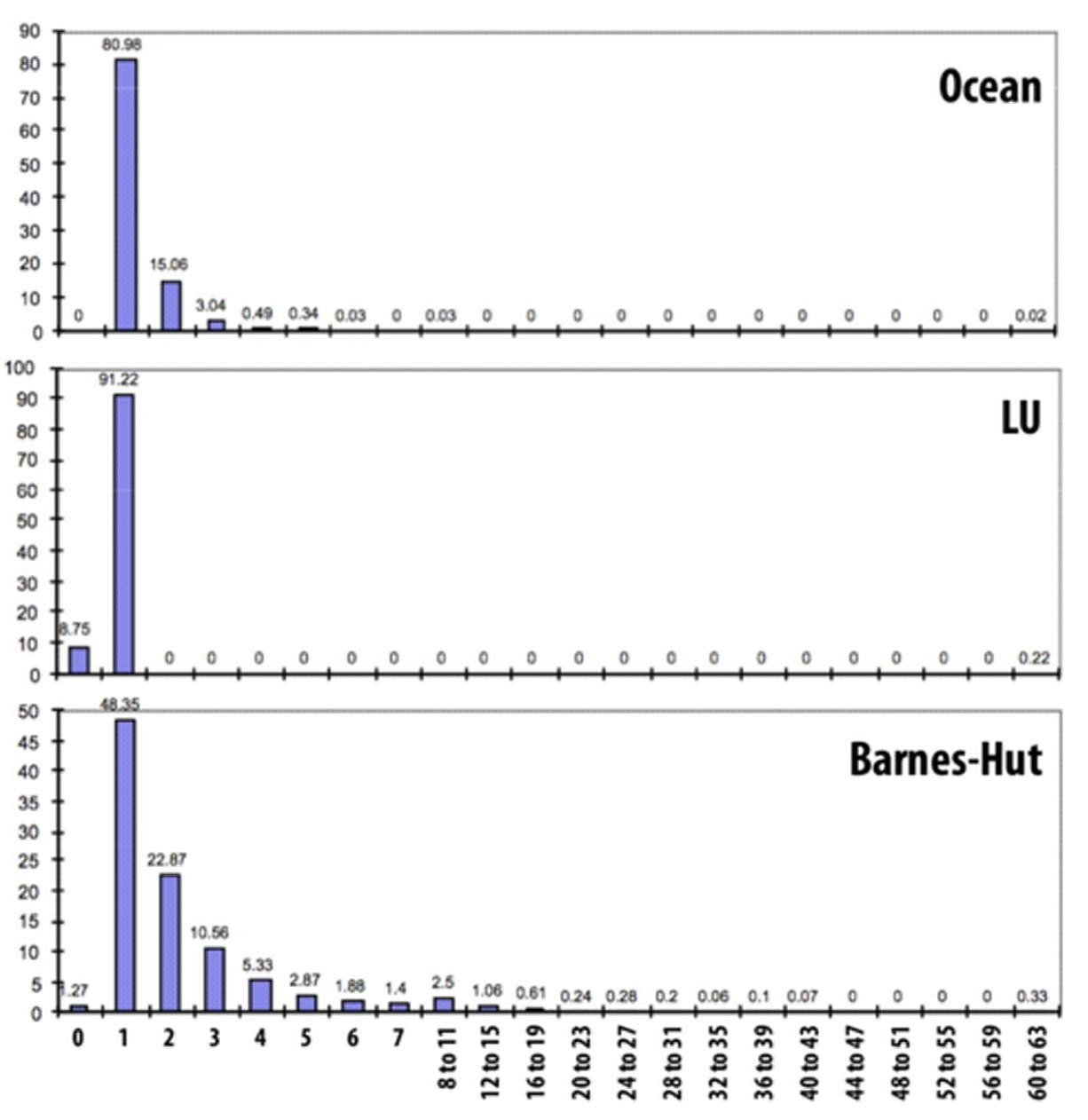

The limited pointer scheme focuses on reducing the P parameter. It is based on the following observation: data is expected to only be in a few caches at one time. Through the observation of several real workload, From histogram of Ocean below, LU and Barnes-Hut, 97% of cache lines are shared by no more than 10 nodes at the same time. So a limited amount of pointers should be sufficient, instead of a complete bitmap.

In the limited pointer scheme, each directory entry stores a certain number of pointers. The pointer is a node ID, indicating that the node has the block in its cache. If the node number is 1024, then we can use a 10 bit pointer. The scheme can be illustrated in the following figure.

This scheme reduces the cost of space greatly. For example, If we use 10 pointers in each entry, in the case of 1024 nodes, the size of a directory entry is 100 bits, which is approximately 10% overhead.

However, you may find a problem: if the same cache line is shared by more than 10 nodes, the scheme will crash. Several possible approaches can manage the overflow as follows:

- Broadcast

If the slots run out in a directory entry, just revert to the basic snooping method to handle the request of the given address. - Kick out

If a new When overflow, kick an old sharer out. Keep the number of sharers no more than that of slots for pointers. - Coarse vector

As mentioned in the general solution part 2, one bit stands for a group of nodes. Thus, overflow sharer will be aggregated to a group. - Dynamic pointers

Maintain a pool of pointers and manage allocation of pointers to directory lines by hardware

We may find that all these methods will introduce some additional manage overhead. This is a typical optimization approach, optimizing the common case in the best effort while handling uncommon case with minimal overcost. In this case, it just sacrifices the performance in some uncommon case to achieve a better overall space overhead.

Sparse Directories Scheme

The sparse directories scheme is based on the following observation: Only a few memory can be resident in cache. Cache is much smaller than main memory anyway. Given that coherence protocol only needs sharing information for lines in the cache, most directory entries are useless at all time. For example, 1MB cache with 1GB main memory indicates 99.9% waste of directory space.

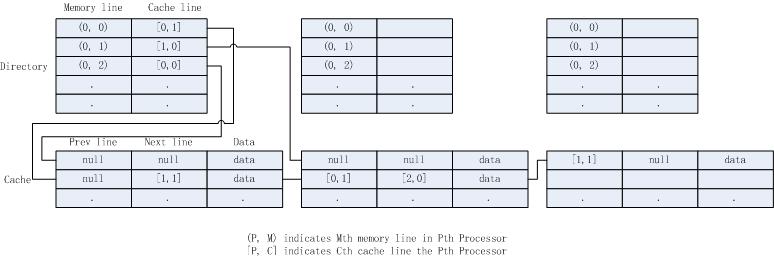

The basic idea of the method is to minimum the overhead of the empty entries in the directory. A typical way to achieve this is to leverage the link list structure. For details, we have a linked list for each memory block. All cache lines of the specified block, probably in different nodes, are organized as linked list through an additional previous/next pointer in the cache line. To find the head of each list, we can have a single head pointer per line in memory. The below graph is a good example for it.

Here in the graph, we use a M*1 directory to find the list head. A more practical alternative would be to simply maintain a small lookup table storing the head of the list for cached lines. A more detailed description can be found in the discussion here. The sparse directory scheme can reduce the space overhead dramatically. We have some additional pointers in each node's cache, of which the size is proportional to the cache size. And we have a small lookup table in memory. However when writing, we have to iterate through the list, which make the latency proportional to the number of sharers. Moreover, it implies higher implementation complexity.

Questions

- We discussed workload driven performance evaluation. Under which workload will snooping cache coherence work better than directory-based cache coherence, even in a large cluster?

- Limited pointer schemes are a great example of optimizing for the common case. Would you come up with another example of such a method?

- Describe several ways to reduce the storage overhead of directory-based cache coherence. What is the effect of these approaches on the performance?

- Are limited pointer schemes and sparse directories helpful for Intel desktop processors? How about for Blacklight?