Overview

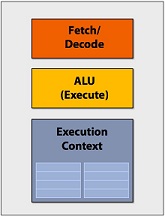

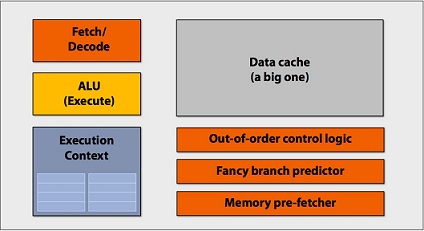

This lecture first introduced the basic structure of a simple single-core processor. This processor, shown in figure 1, contains a fetch/decode unit, an ALU, and some context and registers. The lecture focused on 3 ways that processors can exhibit parallelism. First, they can have multiple cores, allowing the processor to execute different instructions on different data simultaneously. Second, a core can contain multiple ALUs, allowing it to execute the same instruction stream on multiple pieces of data at the same time. This is called SIMD processing. For SIMD to be effective, the same code should be run on a lot of data; this is called coherent code. Finally, the processor could store multiple contexts on-chip, allowing it to context switch between different thread contexts.

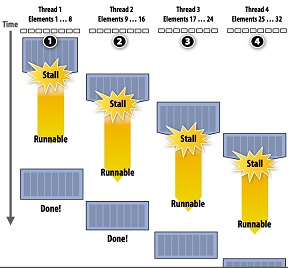

The second half of the lecture focused on memory. Memory latency is how long it takes to fetch memory, and memory bandwidth is the speed at which memory operations can be performed. Memory operations can take 100s of clock cycles, so it is important to find ways to keep this from slowing down programs. One helpful technique is to use multi-threaded processors, to run a runnable thread while another is waiting for a memory operation. This strategy hides latency. Caches also address this problem, this time by decreasing latency. Finally, the lecture discussed specific processors, the type of hardware they use for parallelism, and the trade offs they make in terms of memory latency and bandwidth.

Parallelism

Suppose we have a basic pretend processor that can only execute one instruction per cycle. The processor has three parts: Fetch/Decode, an arithmetic logic unit (ALU), and Execution Context.

- The Fetch/Decode loads the next instruction from memory.

- The ALU executes the instruction.

- The Execution Context contains the state of the code, such as registers.

A drawback of our current processor is that it executes every instruction sequentially. However it is possible that there are multiple instructions that are independent. You could potentially execute these instructions in parallel. If we change our processor to be a superscalar processor, we can perform multiple instructions per clock using multiple ALUs and obtain the correct answer. Performing multiple instructions in parallel is known as instruction level parallelism (ILP) and is used by superscalar processors.

This processor, as shown in figure 2, has a single core with the basic units that we discussed earlier and additional units, as shown in the image, that try to increase performance. However, if we remove these parts, we can use the extra space to add an additional core to our processor. This will make the processor less sophisticated, but now we have multiple cores so we can perform tasks in parallel. While each core may run slower than our previous core with extra speedup stuff, we have two cores that can run simultaneously, so we are able to run just as fast, if not faster, as the previous core. To take advantage of multiple cores, we need to find independent instruction streams. It is extremely difficult for a compiler to determine which streams are independent, so certain 'parallel' languages have constructs like 'forall' to imply that the loop iterations are independent, which lets the compiler schedule them in any order.

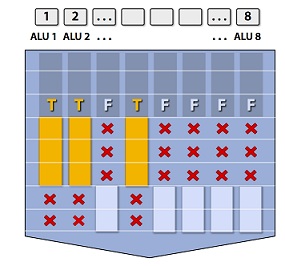

Now consider when we loop through the numbers from 1 to 100 and compute the square of every number. The body of the loop contains the same instruction for every iteration. In our current processor, we will have to fetch the same instruction 100 times to execute this loop. If we know we're going to keep using the same instruction, we could save time by executing multiple rounds of the loop simultaneously. This is known as single instruction, multiple data (SIMD). Using SIMD processing, we can load the instruction onto all of the ALUs and then execute multiple rounds of the loop in parallel on all ALUs. SIMD saves the data in a vector array of floats and executes the instructions on the vector itself. However if the body varies in some loops, you can still get parallelism using multiple cores, but this may impair vector instructions. For example, suppose you have a conditional in a for loop. Each iteration of the loop might not take the same branch in the conditional.

Conditional execution can have very poor performance. Different branches will execute for different threads and we will always perform computations that will get disregarded.

There are a couple of useful terms you should be aware of.

- Coherent execution: What happens when many of the instructions are similar. This is when it's beneficial to use SIMD as we only need to fetch the instructions once.

- Divergent execution: The opposite of coherent execution, meaning the instructions are not similar. When different program instances will need to execute different instructions. This can occur if different instances take different branches of a conditional or if different instances take more iterations of a loop than others. SIMD is not useful for divergent execution since the instructions between instances are likely to differ so we cannot optimize by having each lane execute the same instruction simultaneously.

- Explicit SIMD: Parallelization that occurs at compile time. For example if the programmer uses terms like "forall", the compiler knows that SIMD can be used to optimize the loop.

Memory

Before we discuss memory, there are a couple terms we need to be familiar with.

- Memory latency: The time taken for a memory request to be completed. This usually takes 100s of cycles.

- Memory bandwidth: The rate at which the memory system can provide data to a processor.

- Stalling: Occurs when a processor cannot continue to execute code because of a dependency on a previous instruction. To continue with the current instruction, the processor must wait until the previous instruction is completed. Stalling can occur when we have to load memory.

Memory latency increases the time taken to execute the program. We would like to try to reduce the effect of memory latency. One way to reduce latency is to use a cache. Not only do caches reduce memory latency, they provide a high bandwidth (lecture 2, slide 41). However if data is not stored in the cache, we still have to retrieve it from memory, so we still have a latency problem. We can deal with this problem using prefetching. If we can predict ahead of time that the program will ask for a piece of memory, we can prefetch the data, which will hide latency (note: This is different than reducing latency. Fetching the data will still take the same amount of latency).

While caching and prefetching can help with stalling, there are times we will have to deal with stalling. When a process stalls, it cannot continue with the current instruction. However it is possible there are other parts of the code in another thread we can execute while we wait for the data required to complete the current instruction. So we can reduce stalling using multi-threading, which is when we run a different thread while the old thread waits for its data. This is how we can hide latency within the program. By running other threads, we are hiding the fact that we are losding infomation for the the thread that needs the data

One downfall is we decrease throughput as multi-threading increases the time for an individual thread to finish an assigned task. However, the time to complete all the tasks should decrease, since we are almost always doing work. Multi-threading also requires a lot of memory bandwidth because more threads usually means we will have need of more data. We only have a limited amount of space in the cache, so if we have more data, we will have less cache space to store data for each thread. This means we will probably have to fetch data from memory more often. However we can hide this latency using the methods discussed earlier. We can use a GPU instead of a CPU if we wanted our processor to focus more on throughput. A CPU has a big cache and few threads. A GPU, on the other hand, has a smaller cache, more threads, and larger bandwidth. Even though the GPU has a smaller cache, it is able to hide memory latency using multi-threading.. As long as our bandwidth can load the data and we are able to keep the threads busy, we can hide stalls.

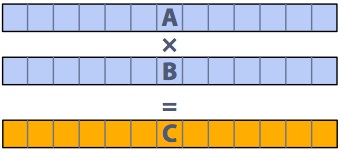

Say we want to multiply two vectors A and B, both of which contain millions of elements. We will perform this on a NVIDIA GTX 480 GPU that can do 480 MULs per clock (1.4 GH) that can do three memory operations (12 bytes) for every MUL. One would think this task would be highly parallelizable since each multiplication is independent. However, the task is limited by bandwidth. The time to perform the multiplications requires way less time than fetching the data. In order to keep the threads busy, we would need about 8.4 TB/sec of bandwidth. However, while this is not optimal, using a GPU is still 8x faster than a CPU. Therefore for a parallel program to be efficiency utilize the resources in a GPU, the ratio between memory fetches and computing needs to have the right balance.

Exercises:

What are the costs and benefits of adding the following to a chip? For each option, give one a code example which would benefit the most from that option.

- Cores

- ALUs

- Thread contexts

Traditionally (e.g. in 15-410), thread context switching was implemented by saving the switched-from thread's state (e.g. registers) in kernel memory, and restoring the switched-to thread's state from memory. How is context switching different with hardware support for threads? What can we do with hardware support that we couldn't do without it?

- Explain how the hardware, the OS, the compiler, and user code all contribute to using parallel resources effectively. Which layer is responsible for which decisions?

- Clearly explain the difference between latency and bandwidth.

snkulkar

snkulkarConsider the amount of time it takes for 100 cars to get from Pittsburgh to Philadelphia. At some point in the route, the 4 lane highway goes down to a 2 lane highway. The latency is the amount of time it takes for one car to get from Pittsburgh to Philadelphia, while the bandwidth is the number of lanes of the road.

Memory latency is defined by the amount of time it takes for a memory operation to complete, while bandwidth is defined by the rate at which the data is provided to the processor.

This comment was marked helpful 0 times.

SIMD would be even slower when doing divergent execution since it needs to run both branching path and mask the results

This comment was marked helpful 0 times.

Does masking actually degrade performance? I'm not sure I see why this would necessarily be the case.

This comment was marked helpful 0 times.