Let's start by defining these two terms:

Abstraction: In many languages, abstraction usually involves some sort of interface for a programmer by using an API or library. When we write programs that use these interfaces, we often do not care about how the function is actually implemented, but are just interested about the input and output values. In a word, abstraction is about the overall idea.

Implementation: This is where we actually care about how something is done "under the hood". We have to consider how our code's speed and efficiency is determined by the realities of the hardware (e.g. locality is something that needs to be taken into account). There are usually many ways to solve a problem, and implementation is all about which one is the best and how we do it.

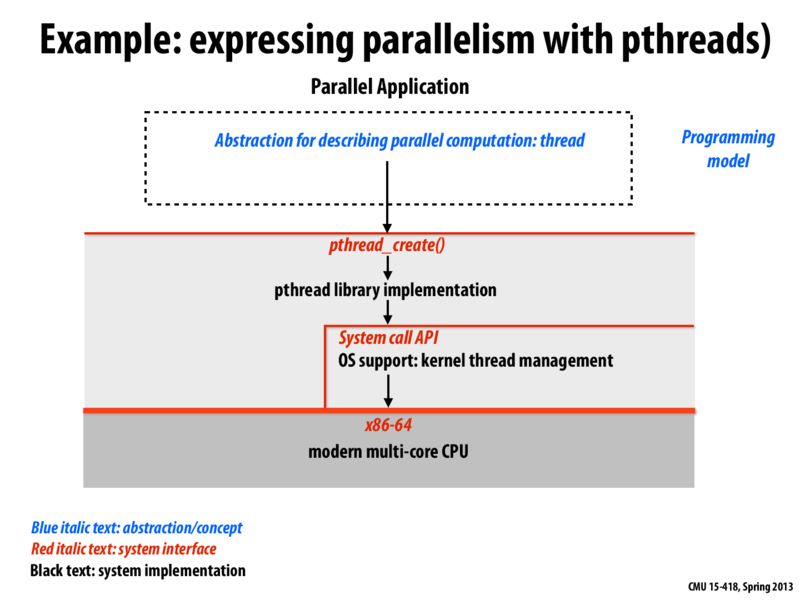

The implementation can contain calls to interfaces of another API intersecting abstraction and implementation. Figure 1 shows an example of this idea using threads as our abstraction, the pthread library as our interface, and the chain of events that occur when calling a function like pthread_create() as our implementation. Notice how this chain switches between interface calls and implementation details.

Why We Care

In this course, we should make sure to know the distinction between abstraction and implementation when talking about our code. It is important to know recognize and differentiate between them so we can reason about our code effectively. We want to separate abstraction and implementation when figuring out what and how each part of the code makes it efficient and contributes to speedup.

Let's Do An Example: ISPC

The best way to talk about this is in terms of an example we all know. In lectures and the homework we have been using ISPC as a way for us to parallelize programs. Let's discuss the abstraction and implementation portions of ISPC.

Abstraction: For ISPC, our abstraction is pretty simple. We have a set of functions and declarations that we can use to tell our compiler which parts of our execution are independent. We can call the ISPC functions from our normal C/C++ code and think about the inputs and outputs of the function as if the code were written in the same language. As a part of this abstraction, we have the notion of spawning "gangs" of program instances which will run the ISPC code in parallel. Note that at this point we do not care how the code is executed in parallel, just that it does. When an ISPC function call returns, we are guaranteed that all instances have completed computation. This is a somewhat analogous (but should not be confused with) pthread_create() and pthread_join().

Implementation: Here is where we actually care about how the code is compiled and linked with the C/C++ code that calls our ISPC functions. The ISPC code is compiled into a .o file which is then linked to the compiled C/C++ code just like any other object file. The assembly in this file tells the processor that we want to use vector wide computations using registers that are capable of handling these vector computations. The width of the vector is determined at compile time and varies with different architectures. The machines in GHC 5201/5205 support SSE4 instructions, meaning they use 4-wide vectors. Most newer machines support AVX instructions which are 8-wide. Some Syntax:

Here is a review of some of the syntax and constructs in ISPC:

export: compiles the function to allow it to be called from C/C++ code.uniform: variable declaration which indicates that the data stored in that variable will be constant across all program instances in a given task.programIndex: a variable which is automatically defined in each ISPC program. This is a similar concept to athreadIdif we were talking about threads, and provides the programmer a way to identify which program instance is running.programCount: another variable which is in scope for all ISPC programs. This one is similar in concept tonumThreadswhen discussing multi-threading, and stores the number of total program instances running for a given piece of code.foreach: each iteration inside of a foreach loop is independent across all instances. A call to foreach tells ISPC to handle program instance scheduling. (QUESTION: Write two examples of a loop, one usingforeachand one usingprogramIndexandprogramCountin the comments)task: we launch multiple tasks to tell the compiler that we would like to extend the ISPC code to run across multiple cores in the system. According to Intel: "one should launch many more tasks than there are processors in the system to ensure good load-balancing, but not so many that the overhead of scheduling and running tasks dominates the computation".

So, are these constructs an abstraction or implementation? To answer this, we have to ask ourselves: do constructs give us information about how the resulting parallelization is implemented, or do they give us an interface to utilize that parallelization? It should be pretty clear that the latter is the case here, as we have only been given a portion of the interface for ISPC. We should also keep in mind that the actual abstraction here is about the fact that we have something that allows us to parallelize our code. The interface is the set of defined constructs that allow us to utilize these constructs, and the implementation is how this code will actually be compiled.

Another Example: sin(x)

Let's go back to the sin(x) example from lecture.

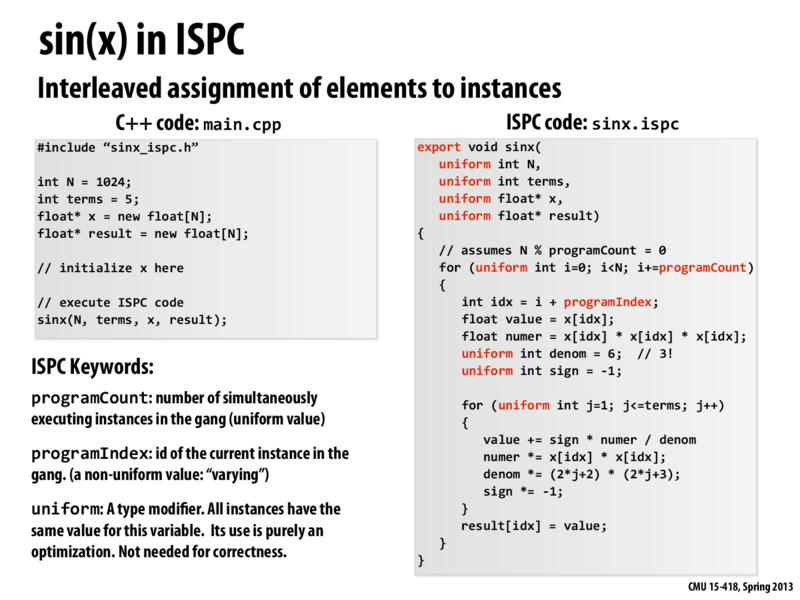

There were a few versions of this code that we discussed, so we'll revisit them to talk about how each one works. We begin with what we will refer to as the "interleaved" sin(x) code.

Let's look at the outer for loop: we loop through all values of i from 0 to N, but increment i by the programCount. This splits the work of computing the sin(x) into chunks of size programCount. If we assume that we are running with a processor that supports AVX instructions, our programCount in this case would be 8. For every 8 values we want to compute the sin for, we give one of those values to each of the 8 ALUs to process it. We then continue to the next set of 8 values and do the same thing. This continues until all values have been computed.

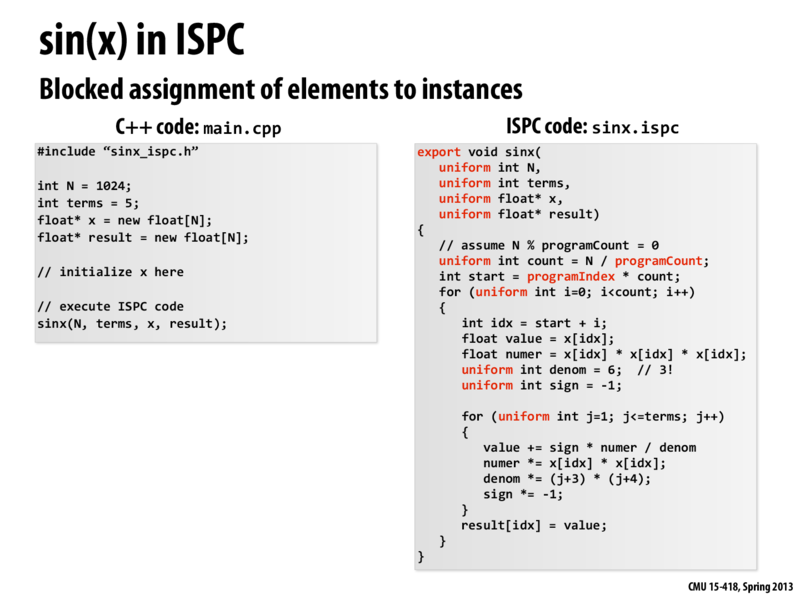

Now lets look at what we will call the "chunked" sin(x) code.

Instead of splitting the work into a bunch of small blocks of size 8, this code will break up the work into 8 large groups. Each program instance will be given a different chunk to compute.

The interleaved and chunk functions are two different implementations of sin(x) which solve the same problem, so which do we prefer? The simplest way to think about it is that the interleaved implementation is preferred because we can use contiguous memory chunks for the different program instances. We also know that each program instance executes the same command together in lockstep, further improving the speed of the interleaved version. The chunked implementation is slower because we are pulling large chunks from memory on each step as opposed to pulling one at a time and splitting it across instances. This isn't exactly what is happening though. The real reason for the speedup has to do with the fact that the gather and scatter operations are very expensive. Think about how the chunked code will write it's output to memory. In order for it to be put back in the correct position, we will most likely need to run a scatter operation. Running this operation every time we enter the loop will be very expensive and greatly reduce our speed. The interleaved code will not have this dependency since memory is in the same contiguous block, and thus only relies on gather operations.

Questions

- What are some restrictions imposed by abstractions? What are best practices?

- How would you explain the abstraction and implementation of shared address space?

- What is the difference between an ISPC task and pthread abstractions? Give a high-level definition of each, and clearly explain how tasks work.

- Say we have a two large arrays A and B (of the same length) and we want to calculate the sum of each index into a new array C. We want to make this program efficient by parallelization. What would be the initial abstraction and implementation of this program? Keep in mind that it will be using for loops most likely, so how would you construct these loops and where would you implement the parallelization?

ISPC task is more abstract. It does not specify how each task is assigned to processors core. It tell the compiler which part can be run on parallel. Pthread is more concrete. The programmer needs to specify how tasks are mapped to threads.

This comment was marked helpful 0 times.