Due: Thursday Feb 13, 11:59PM EST

100 points total

Overview

In this assignment you will write a parallel renderer in CUDA that draws colored circles. While this renderer is very simple, parallelizing the renderer will require you to design and implement data structures that can be efficiently constructed and manipulated in parallel. This is a challenging assignment so you are advised to start early. Seriously, you are advised to start early. Good luck!

Environment Setup

This assignment requires an NVIDIA GPU with CUDA compute capability 2.0 or higher. The following lab machines have adequate GPUs:

- The machines in GHC 5205 (ghc51-76.ghc.andrew.cmu.edu) have NVIDIA GTX 480 GPUs. The capabilities of this chip were described in lecture. Also, Table F.1 in the CUDA C Programming Guide is a handy reference for the maximum number of CUDA threads per thread block, size of thread block, shared memory, etc. The GTX 480 GPUs support CUDA compute capability 2.0.
- ghc01.ghc.andrew.cmu.edu contains an NVIDIA GTX 650 GPU (compute capability 3.0). These machines are physically in GHC 5201.
- ghc02-12.ghc.andrew.cmu.edu contain NVIDIA GTX 670 GPUs (compute capability 3.0).
- NVIDIA GTX 680 GPUs (compute capability 3.0) are installed in ghc13-14.ghc.andrew.cmu.edu. These are the fastest GPUs we have in the public labs on campus.

To get started:

- The NVIDIA CUDA C/C++ Compiler (NVCC) is located at /usr/local/cuda/bin/, which you need to add to your PATH. To add /usr/local/cuda/bin to your path, execute setenv PATH /usr/local/cuda/bin:${PATH} if using csh, or export PATH=/usr/local/cuda/bin:${PATH} if using bash.
- The CUDA shared library will be loaded at runtime. It is located at /usr/local/cuda/lib64/, which you need to add to your LD_LIBRARY_PATH. Execute setenv LD_LIBRARY_PATH ${LD_LIBRARY_PATH}:/usr/local/cuda/lib64/ if using csh, or export LD_LIBRARY_PATH=/usr/local/cuda/lib64/:${LD_LIBRARY_PATH} if using bash.
- Download the Assignment 2 starter code from the course directory: /afs/cs.cmu.edu/academic/class/15418-s14/assignments/asst2.tgz

The CUDA C programmer's guide is an excellent reference for learning how to program in CUDA.

You can also find a large number of examples in the CUDA SDK (/usr/local/cuda/C/src). In addition, there is a wealth of CUDA tutorials and SDK examples on the web (just Google!) and on the NVIDIA developer site.

Part 1: CUDA Warm-Up 1: SAXPY (5 pts)

To gain a bit of practice writing CUDA programs, your warm-up task is to re-implement the SAXPY function from assignment 1 in CUDA. Starter code for this part of the assignment is located in the /saxpy directory of the assignment tarball.

Please finish off the implementation of SAXPY in the function saxpyCuda in saxpy.cu. You will need to allocate device global memory arrays and copy the contents of the host input arrays X, Y, and result into CUDA device memory prior to performing the computation. After the CUDA computation is complete, the result must be copied back into host memory. Please see the definition of the cudaMemcpy function in Section 3.2.2 of the Programmer's Guide.
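On the host side, the sequence described above is typically cudaMalloc for each array, cudaMemcpy with cudaMemcpyHostToDevice, the kernel launch, cudaMemcpy with cudaMemcpyDeviceToHost, and finally cudaFree. The part that is easiest to get wrong is the launch geometry. The following is a plain C sketch (so it runs without a GPU) that emulates a one-thread-per-element launch. The names saxpy_thread, saxpy_emulated_launch and threads_per_block are hypothetical; in real CUDA code the block and thread indices would come from blockIdx.x and threadIdx.x, and the launch would be written as kernel<<<blocks, threads_per_block>>>(...).

```c
/* Body of a hypothetical one-thread-per-element SAXPY kernel, written as plain C.
 * In CUDA, block and thread would be blockIdx.x and threadIdx.x. */
static void saxpy_thread(int block, int thread, int threads_per_block,
                         int N, float alpha, const float *x, const float *y,
                         float *result)
{
    int i = block * threads_per_block + thread;  /* global element index */
    if (i < N)                                    /* the last block may be only partially used */
        result[i] = alpha * x[i] + y[i];
}

/* Serial stand-in for a kernel launch. The block count is rounded up so that
 * every element is covered even when N is not a multiple of threads_per_block. */
void saxpy_emulated_launch(int N, float alpha, const float *x, const float *y,
                           float *result, int threads_per_block)
{
    int blocks = (N + threads_per_block - 1) / threads_per_block;
    for (int b = 0; b < blocks; b++)
        for (int t = 0; t < threads_per_block; t++)
            saxpy_thread(b, t, threads_per_block, N, alpha, x, y, result);
}
```

The bounds check inside the thread body is what makes the rounded-up grid safe: surplus threads in the last block simply do nothing.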

As part of your implementation, add timers around the CUDA kernel invocation in saxpyCuda. Your additional timing measurement should not include the time to transfer data to and from device memory (just the time to execute the computation). Note that a CUDA kernel's execution on the GPU is asynchronous with respect to the main application thread running on the CPU. Therefore, you will want to place a call to cudaThreadSynchronize after the kernel call to wait for completion of all CUDA work, and stop the timer only after cudaThreadSynchronize returns.

Question 1. What performance do you observe compared to the sequential CPU-based implementation of SAXPY (recall program 5 from Assignment 1)? Compare and explain the difference between the results provided by the two sets of timers (the timer you added and the timer that was already in the provided starter code). Are the bandwidth values observed roughly consistent with the reported bandwidths available to the different components of the machine? (Hint: You should use the web to track down the memory bandwidth of an NVIDIA GTX 480 GPU, and the maximum transfer speed of the computer's PCIe x16 bus.)
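To turn a measured time into a bandwidth figure for Question 1, you need the number of bytes moved. A minimal sketch, assuming the kernel reads X[i] and Y[i] and writes result[i] once each (3 arrays of 4-byte floats) and using 1 GB = 1e9 bytes; the function names are illustrative only:

```c
/* Bytes touched by the SAXPY kernel, assuming one read of X[i], one read of Y[i]
 * and one write of result[i] per element, each a 4-byte float. */
double saxpy_bytes_moved(long N)
{
    return 3.0 * (double)N * (double)sizeof(float);
}

/* Effective bandwidth in GB/s (1 GB = 1e9 bytes) for a measured time in seconds. */
double effective_bandwidth_gbps(long N, double seconds)
{
    return saxpy_bytes_moved(N) / seconds / 1e9;
}
```

For the timer that includes transfers, count the bytes actually copied across PCIe instead (every array copied to the device plus the result copied back), and compare that figure against the bus speed rather than the GPU memory bandwidth.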

Part 2: CUDA Warm-Up 2: Parallel Prefix-Sum (10 pts)

Now that you're familiar with the basic structure and layout of CUDA programs, as a second exercise you are asked to come up with a parallel implementation of the function find_repeats, which, given a list of integers A, returns a list of all indices i for which A[i] == A[i+1].

For example, given the array {1,2,2,1,1,1,3,5,3,3}, your program should output the array {1,3,4,8}.

Exclusive Prefix Sum

We want you to implement find_repeats by first implementing a parallel exclusive prefix-sum operation (which you may remember as scan from 15-210).

Exclusive prefix sum takes an array A and produces a new array output that has, at each index i, the sum of all elements up to but not including A[i]. For example, given the array A={1,4,6,8,2}, the output of exclusive prefix sum is output={0,1,5,11,19}.
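As a baseline for checking a parallel version, the definition above translates directly into a sequential loop. This is a minimal reference sketch (the name exclusive_scan_serial is hypothetical, not part of the starter code):

```c
/* Sequential reference: output[0] = 0 and output[i] = A[0] + ... + A[i-1].
 * Also works in place (output == A) because A[i] is read before output[i] is written. */
void exclusive_scan_serial(const int *A, int N, int *output)
{
    int running = 0;
    for (int i = 0; i < N; i++) {
        int value = A[i];     /* read first so in-place use is safe */
        output[i] = running;  /* sum of everything strictly before i */
        running += value;
    }
}
```

Running it on A={1,4,6,8,2} produces {0,1,5,11,19}, matching the example. Note the loop carries a dependence through running, which is exactly what the work-efficient parallel formulation has to break.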

A recursive implementation should be familiar to you from 15-210. As a review (or for those who did not take 15-210), the following code is a C implementation of a work-efficient parallel scan. Details on prefix-sum (and its more general relative, scan) can be found in the 15-210 lecture notes (http://www.cs.cmu.edu/~15210/lectures/sequences.pdf), Section 6.2. Note: Some of you may wish to skip the following recursive implementation and jump to the iterative version below.

```c
// Work-efficient recursive exclusive scan.
// Assumes N = end - start is a power of two; scratch must have room for at least N ints.
void exclusive_scan_recursive(int* start, int* end, int* output, int* scratch)
{
    int N = end - start;

    if (N == 0) return;
    else if (N == 1)
    {
        output[0] = 0;
        return;
    }

    // sum pairs in parallel.
    for (int i = 0; i < N/2; i++)
        output[i] = start[2*i] + start[2*i+1];

    // prefix sum on the compacted array.
    exclusive_scan_recursive(output, output + N/2, scratch, scratch + (N/2));

    // finally, expand back to N values in parallel (odd indices add one more element).
    for (int i = 0; i < N; i++)
    {
        output[i] = scratch[i/2];
        if (i % 2)
            output[i] += start[i-1];
    }
}
```

While the above code expresses our intent well, it is not particularly amenable to a CUDA implementation. The GTX 480 GPUs on the GHC machines do not even support recursion in CUDA! Therefore, we can unwind the recursion tree and express our algorithm iteratively. The following code shows how to traverse the recursion tree and unwind it. Luckily, we do not even need a stack to express it.

The following "C-like" code is an iterative version of scan. We use parallel_for to indicate potentially parallel loops. You might also want to take a look at Kayvon's notes on exclusive scan.

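```c
#include <string.h>  // memmove

// parallel_for marks a loop whose iterations are independent; this define runs them serially.
#define parallel_for for

// Iterative (upsweep/downsweep) exclusive scan. Assumes N = end - start is a power of two.
void exclusive_scan_iterative(int* start, int* end, int* output)
{
    int N = end - start;
    if (N <= 0) return;  // avoid writing output[-1] below
    memmove(output, start, N*sizeof(int));

    // upsweep phase.
    for (int twod = 1; twod < N; twod*=2)
    {
        int twod1 = twod*2;
        parallel_for (int i = 0; i < N; i += twod1)
        {
            output[i+twod1-1] += output[i+twod-1];
        }
    }

    output[N-1] = 0;

    // downsweep phase.
    for (int twod = N/2; twod >= 1; twod /= 2)
    {
        int twod1 = twod*2;
        parallel_for (int i = 0; i < N; i += twod1)
        {
            int t = output[i+twod-1];
            output[i+twod-1] = output[i+twod1-1];
            output[i+twod1-1] += t; // change twod1 to twod to reverse prefix sum.
        }
    }
}
```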

You are welcome to use this general algorithm to implement a version of parallel prefix sum in CUDA.

You must implement the exclusive_scan function in scan/scan.cu. Your implementation will consist of both host and device code. The implementation will require multiple kernel launches.
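Two practical details come up when mapping the iterative code onto CUDA: the input length is generally not a power of two (the harness accepts -n <size>), and each level of the upsweep and downsweep needs to finish before the next begins. The sketch below, in plain C, shows one common way to handle both, under stated assumptions: the input is padded with zeros to the next power of two (zeros do not change any prefix sum), and each level is written as a separate "launch" of rounded/twod1 independent threads, where thread tid handles i = tid * twod1. All names here are hypothetical; in CUDA, each outer-loop iteration could become one kernel launch, with the launch boundary serving as the barrier between levels.

```c
#include <stdlib.h>
#include <string.h>

/* Smallest power of two >= n (n >= 1). */
static int next_pow2(int n)
{
    int p = 1;
    while (p < n) p <<= 1;
    return p;
}

/* One upsweep "thread": it touches only indices inside [tid*twod1, (tid+1)*twod1),
 * so threads of the same level never conflict. */
static void upsweep_thread(int *data, int twod, int tid)
{
    int twod1 = twod * 2;
    int i = tid * twod1;
    data[i + twod1 - 1] += data[i + twod - 1];
}

static void downsweep_thread(int *data, int twod, int tid)
{
    int twod1 = twod * 2;
    int i = tid * twod1;
    int t = data[i + twod - 1];
    data[i + twod - 1] = data[i + twod1 - 1];
    data[i + twod1 - 1] += t;
}

/* Exclusive scan of N >= 0 ints of any length. Returns 0 on success, -1 if allocation fails. */
int exclusive_scan_padded(const int *input, int N, int *output)
{
    if (N <= 0) return 0;
    int rounded = next_pow2(N);
    int *data = calloc((size_t)rounded, sizeof(int));  /* zero padding */
    if (data == NULL) return -1;
    memcpy(data, input, (size_t)N * sizeof(int));

    for (int twod = 1; twod < rounded; twod *= 2) {     /* one "launch" per level */
        int threads = rounded / (twod * 2);
        for (int tid = 0; tid < threads; tid++)
            upsweep_thread(data, twod, tid);
    }
    data[rounded - 1] = 0;
    for (int twod = rounded / 2; twod >= 1; twod /= 2) {
        int threads = rounded / (twod * 2);
        for (int tid = 0; tid < threads; tid++)
            downsweep_thread(data, twod, tid);
    }

    memcpy(output, data, (size_t)N * sizeof(int));      /* drop the padding */
    free(data);
    return 0;
}
```

Launching only rounded/twod1 threads per level, rather than N threads that mostly skip work, keeps the total work proportional to N, which is the point of the work-efficient formulation.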

Implementing Find Repeats Using Prefix Sum

Once you have written exclusive_scan, you should implement the find_repeats function in scan/scan.cu. This will involve writing more device code, in addition to one or more calls to exclusive_scan. Your code should write the list of indices of repeated elements into the provided output pointer (in device memory), and then return the size of the output list.
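One common way to build find_repeats on top of scan is a map, scan, scatter pipeline: flag each index i where A[i] == A[i+1], exclusive-scan the flags so each flagged index learns its output slot, then have each flagged index write itself to that slot. The C sketch below shows that structure serially; find_repeats_sketch and scan_flags are hypothetical names, and scan_flags stands in for your exclusive_scan. On the GPU, the map and scatter loops would each be a kernel with one thread per index.

```c
#include <stdlib.h>

/* Serial stand-in for exclusive_scan: positions[i] = flags[0] + ... + flags[i-1]. */
static void scan_flags(const int *flags, int N, int *positions)
{
    int running = 0;
    for (int i = 0; i < N; i++) {
        positions[i] = running;
        running += flags[i];
    }
}

/* Writes every index i with A[i] == A[i+1] to output in increasing order
 * and returns how many were written (or -1 if allocation fails). */
int find_repeats_sketch(const int *A, int N, int *output)
{
    if (N <= 1) return 0;
    int *flags = malloc((size_t)N * sizeof(int));
    int *positions = malloc((size_t)N * sizeof(int));
    if (flags == NULL || positions == NULL) {
        free(flags);
        free(positions);
        return -1;
    }

    /* Map: flag each index that starts a repeated pair. */
    for (int i = 0; i < N; i++)
        flags[i] = (i + 1 < N && A[i] == A[i + 1]) ? 1 : 0;

    /* Scan: positions[i] counts flagged indices before i, i.e. i's output slot. */
    scan_flags(flags, N, positions);

    /* Scatter: every flagged index owns a distinct slot, so writes never collide. */
    for (int i = 0; i < N; i++)
        if (flags[i])
            output[positions[i]] = i;

    /* Total = exclusive sum at the last index plus the last flag itself. */
    int count = positions[N - 1] + flags[N - 1];
    free(flags);
    free(positions);
    return count;
}
```

On the example input {1,2,2,1,1,1,3,5,3,3} this returns 4 and writes {1,3,4,8}. In a device implementation, the count can be read back from the last elements of the flag and position arrays after the scan.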

Grading: We will test your code for correctness and performance on random input arrays.

For reference, a scan score table is provided below, showing the performance of a simple CUDA implementation on the Gates 5205 machines. For full credit you should be within 20% of the reference solution. However, note that this is a very generous timing requirement, so do not be afraid to improve the performance of your scan implementation.

```
------------
Scan score table (timing of find_repeats):
------------
-------------------------------------------------------------------------
| Element count | Serial Time | Reference Time | Your Time (T) |
-------------------------------------------------------------------------
| 10000         | 0.032       | 0.354          |               |
| 100000        | 0.386       | 0.570          |               |
| 1000000       | 3.307       | 1.484          |               |
| 2000000       | 6.584       | 2.393          |               |
-------------------------------------------------------------------------
| Total score:                                                  |
-------------------------------------------------------------------------
```

Test Harness: By default, the test harness runs on a pseudo-randomly generated array that is the same every time the program is run, in order to aid in debugging. You can pass the argument -i random to run on a random array; we will do this when grading. We encourage you to come up with alternate inputs to your program to help you evaluate it. You can also use the -n <size> option to change the length of the input array.

The argument --thrust will use the Thrust library's implementation of exclusive scan. Up to two points of extra credit will be given to anyone who can create an implementation that is competitive with Thrust.

Part 3: A Simple Circle Renderer (85 pts)

Now for the real show!

The directory /render of the assignment starter code contains an implementation of a renderer that draws colored circles. Build the code, and run the renderer with the following command line: ./render rgb. You will see an image of three circles appear on screen ('q' closes the window). Now run the renderer with the command line ./render snow. You should see an animation of falling snow.

The assignment starter code contains two versions of the renderer: a sequential, single-threaded C++ reference implementation in refRenderer.cpp, and an incorrect parallel CUDA implementation in cudaRenderer.cu.

Renderer Overview

We encourage you to familiarize yourself with the structure of the renderer codebase by inspecting the reference implementation in refRenderer.cpp. The method setup is called prior to rendering the first frame. In your CUDA-accelerated renderer, this method will likely contain all your renderer initialization code (allocating buffers, etc.). render is called each frame and is responsible for drawing all circles into the output image. The other main function of the renderer, advanceAnimation, is also invoked once per frame. It updates circle positions and velocities. You will not need to modify advanceAnimation in this assignment.

The renderer accepts an array of circles (3D position, velocity, radius, color) as input. The basic sequential algorithm for rendering each frame is:

```
Clear image
for each circle
    update position and velocity
for each circle
    compute screen bounding box
    for all pixels in bounding box
        compute pixel center point
        if center point is within the circle
            compute color of circle at point
            blend contribution of circle into image for this pixel
```

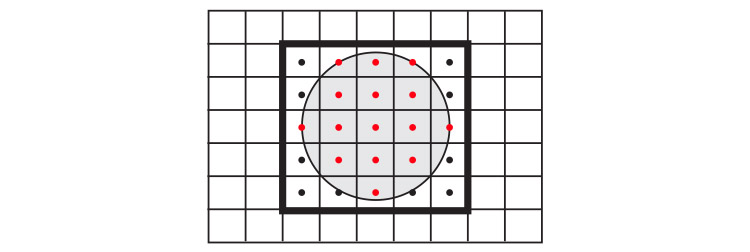

Figure 2 illustrates the basic algorithm for computing circle-pixel coverage using point-in-circle tests. Notice that a circle contributes color to an output pixel only if the pixel's center lies within the circle.
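The inner part of the sequential algorithm (bounding box, pixel center, point-in-circle test, blend) can be sketched in C as follows. This is an illustration under assumptions, not the starter code: circle center and radius are taken to be in normalized [0,1] image coordinates, blending uses a fixed alpha of 0.5 on RGB only (the alpha channel is omitted), and shade_circle and Color are hypothetical names.

```c
typedef struct { float r, g, b; } Color;

/* Shades one circle into a width x height image and returns how many pixels it covered.
 * Assumed conventions: (cx, cy) and radius in normalized [0,1] coordinates, fixed alpha 0.5. */
int shade_circle(Color *image, int width, int height,
                 float cx, float cy, float radius, Color color)
{
    /* Screen bounding box in pixels, clamped to the image. It may include one extra
     * column or row; the point-in-circle test below decides actual coverage. */
    int minX = (int)(width * (cx - radius));
    int maxX = (int)(width * (cx + radius)) + 1;
    int minY = (int)(height * (cy - radius));
    int maxY = (int)(height * (cy + radius)) + 1;
    if (minX < 0) minX = 0;
    if (minY < 0) minY = 0;
    if (maxX > width) maxX = width;
    if (maxY > height) maxY = height;

    const float alpha = 0.5f;
    int covered = 0;
    for (int py = minY; py < maxY; py++) {
        for (int px = minX; px < maxX; px++) {
            /* Pixel center point in normalized coordinates. */
            float x = (px + 0.5f) / width;
            float y = (py + 0.5f) / height;
            float dx = x - cx, dy = y - cy;
            if (dx * dx + dy * dy > radius * radius)
                continue;  /* center outside the circle: no contribution */
            Color *p = &image[py * width + px];
            p->r = alpha * color.r + (1.0f - alpha) * p->r;
            p->g = alpha * color.g + (1.0f - alpha) * p->g;
            p->b = alpha * color.b + (1.0f - alpha) * p->b;
            covered++;
        }
    }
    return covered;
}
```

Comparing squared distances avoids a square root per pixel. Note that the read-blend-write on *p is exactly the critical region discussed under the atomicity requirement.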

CUDA Renderer

After familiarizing yourself with the circle rendering algorithm as implemented in the reference code, now

study the CUDA implementation of the renderer provided in cudaRenderer.cu. You can run the CUDA

implementation of the renderer using the --renderer cuda program option.

The provided CUDA implementation parallelizes computation across all input circles, assigning one circle to each CUDA thread. While this CUDA implementation is a complete implementation of the mathematics of a circle renderer, it contains several major errors that you will fix in this assignment. Specifically, the current implementation does not ensure that image updates are atomic, and it does not preserve the required order of image updates (the ordering requirement is described below).

Renderer Requirements

Your parallel CUDA renderer implementation must maintain two invariants that are preserved trivially in the sequential implementation.

- Atomicity: All image update operations must be atomic. The critical region includes reading the four 32-bit floating-point values (the pixel's rgba color), blending the contribution of the current circle with the current image value, and then writing the pixel's color back to memory.

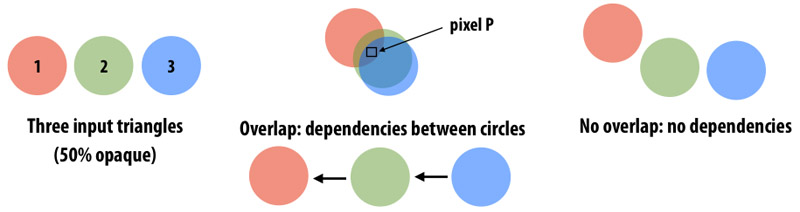

- Order: Your renderer must perform updates to an image pixel in circle input order. That is, if circle 1 and circle 2 both contribute to pixel P, any image updates to P due to circle 1 must be applied to the image before updates to P due to circle 2. As discussed in class, preserving the ordering requirement allows for correct rendering of transparent surfaces. (It has a number of other benefits for graphics systems. If curious, talk to Kayvon.) A key observation is that the definition of order only specifies the order of updates to the same pixel. Thus, as shown in Figure 3, there are no ordering requirements between circles that do not contribute to the same pixel. These circles can be processed independently.
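To see why order matters, consider a single color channel and the blend used in the sketch of the algorithm above, assuming a fixed alpha of 0.5 (an illustrative value). Blending is not commutative, so applying the same two circles to a pixel in different orders gives different colors. The names blend and apply_in_order are hypothetical.

```c
/* One channel of alpha blending with an assumed fixed alpha of 0.5. */
float blend(float existing, float circle_color)
{
    const float alpha = 0.5f;
    return alpha * circle_color + (1.0f - alpha) * existing;
}

/* Applies a sequence of circle colors to one pixel, in the given order. */
float apply_in_order(float background, const float *colors, int count)
{
    float value = background;
    for (int k = 0; k < count; k++)
        value = blend(value, colors[k]);
    return value;
}
```

Starting from 0, blending color 1.0 and then 0.0 yields 0.25, while blending 0.0 and then 1.0 yields 0.5. A renderer that lets two threads update an overlapping pixel in either order can therefore produce either result from frame to frame.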

Because the provided CUDA implementation does not satisfy these requirements, the effect of not respecting order can be seen by running the CUDA renderer implementation on the rgb and circles scenes. You will see horizontal streaks through the resulting images. These streaks will change with each frame.

What You Need To Do

Your job is to write the fastest correct CUDA renderer implementation you can. You may take any approach you see fit, but your renderer must adhere to the atomicity and order requirements specified above. A solution that does not meet both requirements will be given no more than 10 points on Part 3 of the assignment. We have already given you such a solution!

A good place to start would be to read through cudaRenderer.cu and convince yourself that it does not meet the correctness requirement. To visually see the effect of violating the above two requirements, compile the program with make. Then run ./render -r cuda rgb, which should display the three-circle image. Horizontal streaks through the generated image show the incorrect behavior. Compare this image with the image generated by the sequential code by running ./render rgb.

Following are some of the options to ./render:

```
-b  --bench START:END    Benchmark mode, do not create display. Time frames from START to END
-c  --check              Runs both sequential and CUDA versions and checks correctness of CUDA version
-f  --file FILENAME      Dump frames in benchmark mode (FILENAME_xxxx.ppm)
-r  --renderer WHICH     Select renderer: WHICH=ref or cuda
-s  --size INT           Make rendered image <INT>x<INT> pixels
-?  --help               Prints information about switches mentioned here.
```

Checker code: To detect correctness of the program, render has a convenient --check option. This option runs the sequential version of the reference CPU renderer along with your CUDA renderer and then compares the resulting images to ensure correctness. The time taken by your CUDA renderer implementation is also printed.

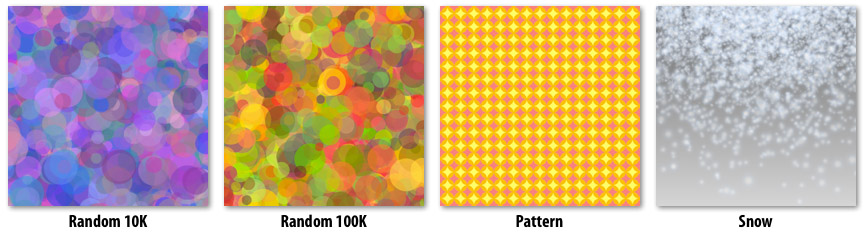

We provide a total of five circle datasets you will be graded on. To check the correctness and performance score of your code, run make check in the /render directory. If you run it on the starter code, the program will print a table like the following:

```
------------
Score table:
------------
-------------------------------------------------------------------------------------------
| Scene Name   | Naive Time (Tn) | Fast Time (To) | Your Time (T)  | Score |
-------------------------------------------------------------------------------------------
| rgb          | 4.5             | 0.35           | 127.0251 (F)   | 0     |
| rand10k      | 230             | 10.85          | 68.5904 (F)    | 0     |
| rand100k     | 2305            | 110.80         | 751.5714 (F)   | 0     |
| pattern      | 27              | 0.88           | 4.1546 (F)     | 0     |
| snowsingle   | 2277            | 49.67          | 16.1984 (F)    | 0     |
-------------------------------------------------------------------------------------------
| Total score:                                                       | 0/65  |
-------------------------------------------------------------------------------------------
```

In the above table, "naive time" is the performance of a very naive, but correct, CUDA solution for your current machine. "Fast time" is the performance of a good solution on your current machine. "Your time" is the performance of your current CUDA renderer solution. Your grade will depend on the performance of your implementation compared to these reference implementations (see Grading Guidelines). Note that the reference times are computed on an NVIDIA GTX 480 GPU (specifically, on ghc75.ghc.andrew.cmu.edu), so you should compare your implementation's performance to the reference times only if you are running on a GTX 480.

Along with your code, we would like you to hand in a clear, high-level description of how your implementation works as well as a brief description of how you arrived at this solution. Specifically address approaches you tried along the way, and how you went about determining how to optimize your code (For example, what measurements did you perform to guide your optimization efforts?).

Aspects of your work that you should mention in the write-up include:

- Include both partners' names and Andrew IDs at the top of your write-up.

- Replicate the score table generated for your solution.

- Describe how you decomposed the problem and how you assigned work to CUDA thread blocks and threads (and maybe even warps).

- Describe where synchronization occurs in your solution.

- What, if any, steps did you take to reduce communication requirements (e.g., synchronization or main memory bandwidth requirements)?

- Briefly describe how you arrived at your final solution. What other approaches did you try along the way? What was wrong with them?

Grading Guidelines

- The write-up for the assignment is worth 15 points.

Your implementation is worth 65 points. These are equally divided into 13 points per scene as follows:

- 2 correctness points per scene.

- 11 performance points per scene (only obtainable if the solution is correct). The score table has two reference times: Tn (Naive Time) and To (Fast Time). These times give the performance of two functionally correct CUDA renderer solutions. One of them is very naive and the other implements a reasonable set of optimizations.

- No performance points will be given for solutions having time (T) worse than Tn.

- Full performance points will be given for solutions within 20% of the optimized solution (T < 1.20 * To).

For other values of T (1.20 To <= T < Tn), your performance score on a scale of 1 to 11 will be calculated as:

$\text{Score} = \left\lceil \dfrac{T_o}{T} \times 11 \right\rceil$
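The per-scene rules above can be summarized in a few lines of C, which may help when estimating a score from the make check table. This is an unofficial sketch: the grading scripts are authoritative, scene_points is a hypothetical name, and the case T == Tn (not specified in the text) is treated here as falling under the formula.

```c
/* Unofficial per-scene score: 2 correctness points plus up to 11 performance points.
 * Assumption: T == Tn is scored with the formula, since the text leaves it unspecified. */
int scene_points(int correct, double T, double To, double Tn)
{
    if (!correct) return 0;                  /* performance points require correctness */
    int perf;
    if (T > Tn) perf = 0;                    /* worse than the naive reference */
    else if (T < 1.20 * To) perf = 11;       /* within 20% of the optimized reference */
    else {
        double raw = To / T * 11.0;
        perf = (int)raw;
        if (perf < raw) perf++;              /* RoundUp (ceiling) without libm */
    }
    return 2 + perf;
}
```

For example, with To = 10 and Tn = 100, a correct time of 20 gives raw = 5.5, which rounds up to 6 performance points, for 8 points on that scene.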

- Up to five points extra credit (instructor discretion) for solutions that achieve significantly greater performance than required. Your write-up must explain your approach clearly and thoroughly.

- Up to five points extra credit (instructor discretion) for a high-quality parallel CPU-only renderer implementation that achieves good utilization of all cores and SIMD vector units of the cores. Feel free to use any tools at your disposal (e.g., SIMD intrinsics, ISPC, pthreads). To receive credit you should analyze the performance of your GPU and CPU-based solutions and discuss the reasons for differences in implementation choices made.

Assignment Tips and Hints

Below are a set of tips and hints compiled from previous years. Note that there are various ways to implement your renderer and not all hints may apply to your approach.

- To facilitate remote development and benchmarking, we have created a --benchmark option to the render program. This mode does not open a display, and instead runs the renderer for the specified number of frames.
- When in benchmark mode, the --file option sets the base file name for PPM images created at each frame. Created files are basenamexxxx.ppm. No PPM files are created if the --file option is not used.
- There are two potential axes of parallelism in this assignment. One axis is parallelism across pixels; another is parallelism across circles (provided the ordering requirement is respected for overlapping circles).

- The prefix-sum operation provided in exclusiveScan.cu_inl may be valuable to you on this assignment (not all solutions may choose to use it). See the simple description of a prefix-sum here. We have provided an implementation of an exclusive prefix-sum on a power-of-two-sized array in shared memory. The provided code does not work on non-power-of-two inputs. IT ALSO REQUIRES THAT THE NUMBER OF THREADS IN THE THREAD BLOCK BE THE SIZE OF THE ARRAY.
- You are allowed to use the Thrust library in your implementation if you so choose. Thrust is not necessary to achieve the performance of the optimized CUDA reference implementations.

- Is there data reuse in the renderer? What can be done to exploit this reuse?

- How will you ensure atomicity of image update since there is no CUDA language primitive that performs the logic of the image update operation atomically? Constructing a lock out of global memory atomic operations is one solution, but keep in mind that even if your image update is atomic, the updates must be performed in the required order. We suggest that you think about ensuring order in your parallel solution first, and only then consider the atomicity problem (if it still exists at all) in your solution.

- If you are having difficulty debugging your CUDA code, you can use printf directly from device code if you use a sufficiently new GPU and CUDA library: see this brief guide.
- If you find yourself with free time, have fun making your own scenes. A fireworks demo is just asking to be made!
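As a concrete illustration of the hint about ensuring order first, here is a plain C sketch of the pixel axis of parallelism: if each "thread" owns one pixel and visits circles in input order, updates to that pixel are ordered by construction, and because no other thread writes the pixel, no lock is needed. This is a deliberately simple starting point, not an optimized design (every pixel tests every circle). The Circle struct with a single color channel, the fixed alpha of 0.5, and the names shade_pixel and render_pixel_parallel are all assumptions made for illustration.

```c
/* Hypothetical circle with one color channel, in normalized [0,1] coordinates. */
typedef struct { float x, y, radius, color; } Circle;

/* Work of one "pixel thread": visit circles in input order and blend each covering circle. */
static float shade_pixel(int px, int py, int width, int height,
                         const Circle *circles, int num_circles, float background)
{
    const float alpha = 0.5f;                  /* assumed fixed blend factor */
    float x = (px + 0.5f) / width;             /* pixel center */
    float y = (py + 0.5f) / height;
    float value = background;
    for (int c = 0; c < num_circles; c++) {    /* input order preserves the order invariant */
        float dx = x - circles[c].x, dy = y - circles[c].y;
        if (dx * dx + dy * dy <= circles[c].radius * circles[c].radius)
            value = alpha * circles[c].color + (1.0f - alpha) * value;
    }
    return value;
}

/* Serial stand-in for a launch with one thread per pixel; each pixel has exactly one writer. */
void render_pixel_parallel(float *image, int width, int height,
                           const Circle *circles, int num_circles)
{
    for (int py = 0; py < height; py++)
        for (int px = 0; px < width; px++) {
            int idx = py * width + px;
            image[idx] = shade_pixel(px, py, width, height, circles, num_circles, image[idx]);
        }
}
```

The obvious cost is that each pixel scans all circles. Reducing that, for example by first building per-region lists of candidate circles (where the provided prefix-sum can help with compaction), is where most of the optimization effort tends to go.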

Hand-in Instructions

Please submit your work in the directory:

/afs/cs/academic/class/15418-s14-users/<ANDREW ID>/asst2

Remember: You need to execute aklog cs.cmu.edu to avoid permission issues.

- Please submit your writeup as the file writeup.pdf.
- Please submit your code in the file code.tgz. Just submit your full assignment 2 source tree. To keep submission sizes small, please do a make clean in the program directories prior to creating the archive, and remove any residual output images, etc. Before submitting the source files, make sure that all code is compilable and runnable! We should be able to simply untar code.tgz, make, then execute your programs in /saxpy, /scan, and /render without manual intervention.

Our grading scripts will rerun the checker code allowing us to verify your score matches what you submitted in the writeup.pdf. We might also try to run your code on other datasets to further examine its correctness.

I believe the table mentioned in the first bullet point under 'Environment Setup' is actually Table G.1, not F.1.
